10th Annual State of the Software Supply Chain®

Optimizing Efficiency & Reducing Waste

This year we estimate open source downloads to be over 6.6 trillion — the scale of open source is unfathomable. We also know that commercial state-of-the-art software is built from as much as 90% open source code, including hundreds of discrete libraries in a single application. While use of OSS accelerates application development cycles and reduces expenses, it also introduces threat vectors in the form of vulnerabilities and intellectual property (IP) risk from restrictive and reciprocal licenses.

Managing these OSS risks successfully in DevOps organizations requires efficient security policies and practices that keep pace with the constant evolution and addition of OSS libraries in an accelerated development environment, where the risk profile changes rapidly.

Previously, we discussed persistent risk and how open source consumption contributes to it. We also examined complacency in dependency management and found that 80% of enterprise application dependencies were not upgraded within a year. Our past analysis also showed that, of the versions that do get upgraded, 69% had a better choice available; that 95% of vulnerable versions had a non-vulnerable fix available when they were first chosen; and that 62% of consumers used an avoidable vulnerable version.

62% of open source consumers used an avoidable vulnerable component version

These sobering statistics led us to where we are now — diving deep into how organizations can change their consumption behaviors to optimize risk mitigation efforts and reduce waste, especially waste that might occur in targeting lower priority risks.

Size Doesn’t Matter: All Applications Have Sizable Risk

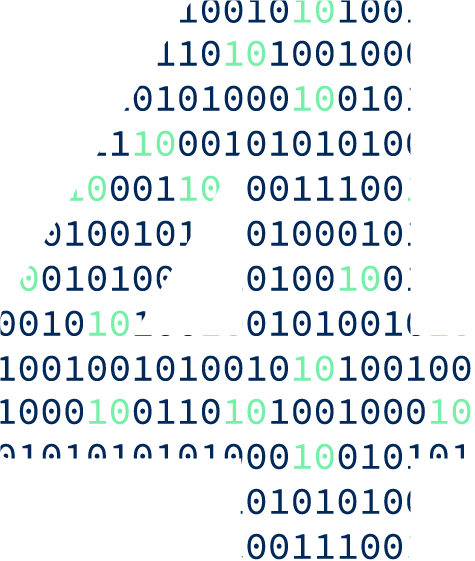

Figure 4.1 Average Number of Packages Per Application

This chart shows the distribution of application dependency size. Small: up to 25 dependencies; Medium: 26 to 150 dependencies; Large: 151 to 400 dependencies; X-Large: 401 or more dependencies.
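The size buckets in Figure 4.1 amount to a simple threshold function. The Python sketch below encodes them; the function name `size_category` is hypothetical, and the cut-offs are taken directly from the caption.

```python
def size_category(dependency_count: int) -> str:
    """Classify an application by dependency count, using the Figure 4.1 buckets."""
    if dependency_count < 0:
        raise ValueError("dependency_count must be non-negative")
    if dependency_count <= 25:
        return "Small"      # up to 25 dependencies
    if dependency_count <= 150:
        return "Medium"     # 26 to 150 dependencies
    if dependency_count <= 400:
        return "Large"      # 151 to 400 dependencies
    return "X-Large"        # 401 or more dependencies


# An application with about 180 components, the current average, lands in "Large".
print(size_category(180))  # Large
```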

The average application has around 180 open source components, up from around 150 last year. When left unmanaged, every one of these packages can be a source of risk, and as previous chapters of this report showed, that risk is only growing. It is not a question of if you get breached, but when.

The data leaves no doubt: the larger the application, the larger the risk. As an application grows, so does its number of dependencies, and the sheer size and complexity of a large code base make it harder to manage. As a result, organizations need more time to remediate vulnerabilities, and the longer remediation takes, the higher the risk.

However, it also became abundantly clear that no ‘small’ application is trivial to manage. Moreover, our data shows that a substantial share of applications, around 40%, are in fact large applications. Every organization has to contend with this problem.

No matter the size of an application, whether it has 25 dependencies or 400 or 800 (which is not abnormal), managing them manually is an unsustainable workload. You can and must gain efficiency across all applications regardless of their size, especially as the industry moves toward microservices and modular applications, which means more, smaller applications. Optimizing the management of 1,000 small applications is just as beneficial as optimizing one large application.

So, how do enterprises get a handle on this massive issue that is not only causing increasing risk to them and their customers, but is also wasting an incredible amount of time? We must first understand two interrelated key concepts:

Efficiency Hurdle: Development time is limited, with little or no allocation in schedules for remediation tasks or dependency upgrade research. Stopping builds and slowing down pipelines to review vulnerability risks manually is impractical and goes against the flow of DevOps. It frustrates developers and creates intense friction, which is why organizations must prioritize reducing waste.

Reduce Waste: The efficiency hurdle is a solvable problem. Enterprises can create efficiency, and thus reduce waste, by optimizing remediation through a combined approach: an enterprise-scale SCA tool, highly accurate component intelligence, and effective dependency management practices.

Stop Wasting Developer Time: What to Look for in an SCA Tool

Integrate for effective but non-intrusive software composition analysis

Fixing vulnerabilities is a huge drain on development time. It is faster when vulnerability detection is integrated into development environments or CI/CD pipelines. The right tool provides context about the expected functionality of each component, so developers can make informed, real-time decisions about the best version to use.

We have all heard of Shifting Left: moving remediation as close to the beginning of the development cycle as possible. We still agree with this, but we have found that you must go even further and review dependencies continuously, because there is no beginning or end. Reviewing and remediating dependencies must become part of the regular flow of development rather than a step performed at ‘test’ or ‘release’ time. To succeed, however, it must be far more efficient than it is today, because it now happens more often and can otherwise disrupt the pace of development. This is the only way to reduce upstream and downstream effects, rework, and wasted developer time.

You must review dependencies on a continuous basis; there is no beginning or end.

For an SCA tool to be successful, it must be integrated into CI/CD pipelines and provide context about the expected functionality of each component, so developers can make informed decisions about the best version to use. If your tool does this, you are one step closer to reducing waste.
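As a rough illustration of what pipeline integration can look like, the Python sketch below turns SCA findings into a pass/fail build step. It assumes the findings have already been produced by some scanner; the `Finding` record, the 7.0 fail threshold, and the advisory IDs are hypothetical placeholders, not any particular tool’s output format.

```python
import sys
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    component: str
    version: str
    advisory_id: str
    score: float  # severity score on a 0-10 scale


def policy_violations(findings: list[Finding], threshold: float = 7.0) -> list[Finding]:
    """Return the findings whose score meets or exceeds the (hypothetical) fail threshold."""
    return [f for f in findings if f.score >= threshold]


def ci_gate(findings: list[Finding], threshold: float = 7.0) -> int:
    """Return a process exit code: 0 lets the build continue, 1 fails it."""
    violations = policy_violations(findings, threshold)
    for f in violations:
        # Name the component, version, and advisory so the developer can act in place.
        print(f"FAIL {f.component}@{f.version}: {f.advisory_id} (score {f.score})", file=sys.stderr)
    return 1 if violations else 0


sample = [
    Finding("example-lib", "1.2.0", "EXAMPLE-0001", 9.8),
    Finding("other-lib", "3.4.1", "EXAMPLE-0002", 5.3),
]
exit_code = ci_gate(sample)  # a real CI step would finish with sys.exit(exit_code)
print(exit_code)  # 1
```

Running a gate like this on every commit, rather than only at release, is what keeps review continuous; the threshold is the setting that keeps it from becoming intrusive.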

Demand High-Quality Open Source Component Intelligence

Reliable component intelligence is the foundation of efficient risk remediation and dependency management. Component intelligence depends upon the quality of the underlying vulnerability data.

Look for two main characteristics of high-quality vulnerability data:

- Vulnerabilities scored in a consistent and repeatable manner, in line with industry standards

- Comprehensive, accurate mapping of each vulnerability to the libraries and versions it affects
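One widely used industry standard for consistent scoring is CVSS. The Python sketch below maps a CVSS v3.x base score to its qualitative severity rating; it is shown only to illustrate why a modest score correction can change a vulnerability’s priority, and the function name is hypothetical.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0-10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1-3.9
    if score < 7.0:
        return "Medium"    # 4.0-6.9
    if score < 9.0:
        return "High"      # 7.0-8.9
    return "Critical"      # 9.0-10.0


# A public score of 6.5 corrected to 7.5 crosses from Medium into High.
print(cvss_severity(6.5), "->", cvss_severity(7.5))  # Medium -> High
```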

Figure 4.2 Corrected Version Information

Source: Sonatype

This pie chart shows that 92% of public vulnerability information needed a version correction after deeper security research review.

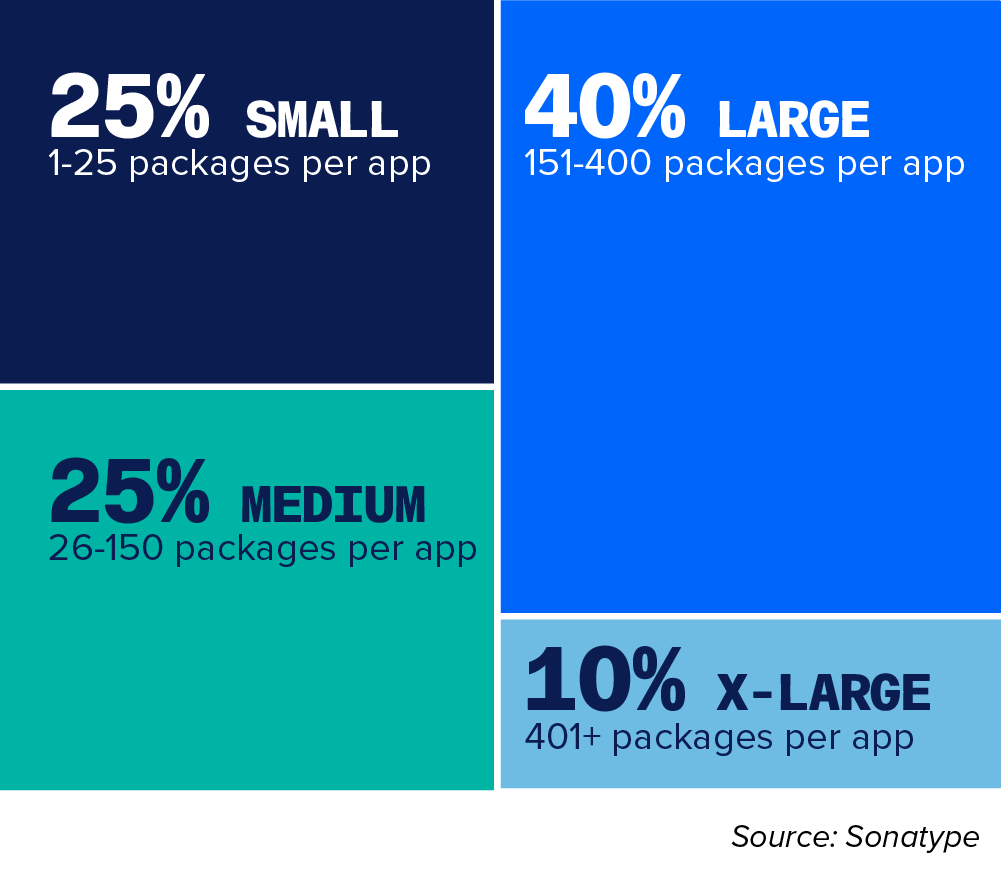

Figure 4.3 Score Corrected Aggregations

This bar chart depicts public vulnerability score corrections by score severity, from 10 down to 1.

While public data is often accurate for a single version, it tends to be wrong when it comes to multiple versions — usually because the version range is incorrect. This creates a false sense of security, as you might assume, “the version I’m using isn’t affected.” But that’s often not true; the security researcher simply didn’t review all versions thoroughly. It’s important to understand that while the version mentioned in a public advisory is typically correct, many other versions haven’t been reviewed or validated.
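The gap between the version named in an advisory and every affected version is easy to see in code. The Python sketch below checks a version against an affected range (introduced inclusive, fixed exclusive). It assumes purely numeric dotted versions with no pre-release tags, and the range and version numbers in the example are hypothetical.

```python
from typing import Optional


def parse_version(v: str) -> tuple[int, ...]:
    """Parse a numeric dotted version; trailing zeros are dropped so '2.0' == '2.0.0'."""
    parts = [int(p) for p in v.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def is_affected(version: str, introduced: str, fixed: Optional[str]) -> bool:
    """True if introduced <= version < fixed; fixed=None means no fixed release exists yet."""
    v = parse_version(version)
    if v < parse_version(introduced):
        return False
    return fixed is None or v < parse_version(fixed)


# Hypothetical advisory that names a single version, versus the researched affected range.
public_listed = {"2.3.0"}
in_use = "2.2.5"
print(in_use in public_listed)                # False: looks unaffected
print(is_affected(in_use, "2.0.0", "2.4.1"))  # True: inside the real range
```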

We dug deeper into scoring accuracy and found that 69% of vulnerabilities initially scored below 7 were corrected to 7 or higher, and 16.5% were corrected to 9 or higher. This creates what we call surprise risk, along with a false sense of comfort that you are not at risk.

Public data is often inaccurate when it comes to multiple versions, creating surprise risk and a false sense of comfort that you’re not at risk.

To reiterate, vulnerabilities incorrectly scored as low lead to emergency, reactive work once the true threat is realized. This surprise work and surprise risk disrupt the flow of development and cause inefficiency, while the false sense of security could result in a breach or service interruption. Vulnerabilities detected after a serious breach or incident take longer to resolve, erode trust, and in extreme cases can endanger lives.

The converse problem is vulnerabilities incorrectly scored as high, which divert development capacity to remediation and take away precious time that could be spent on the truly high-priority vulnerabilities that could lead to serious impacts.

The component intelligence built into your SCA tool must provide accurate vulnerability data, so that remediation efforts target genuinely high-priority vulnerabilities instead of wasting development capacity.

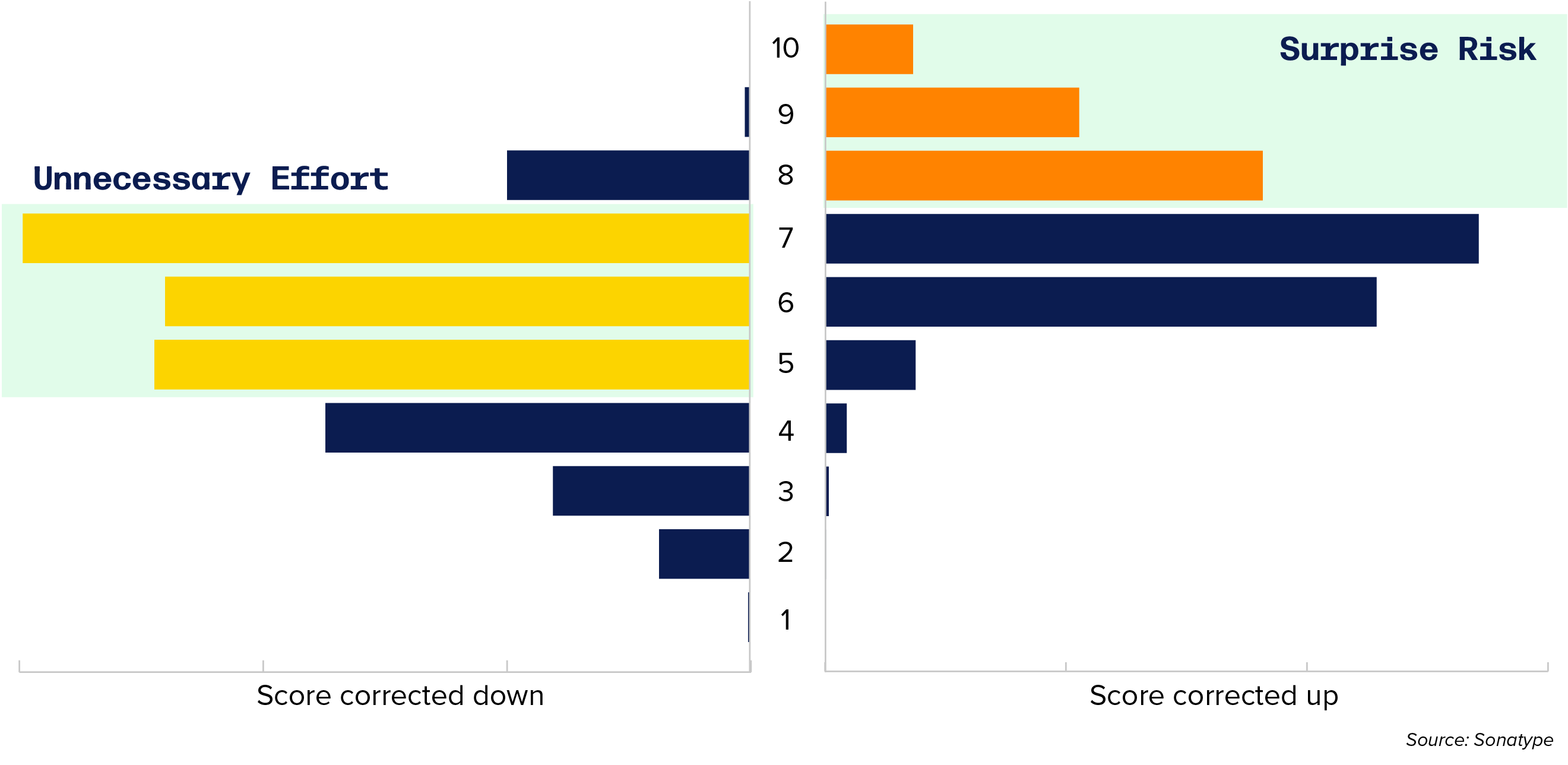

Comprehensive Ecosystem Support

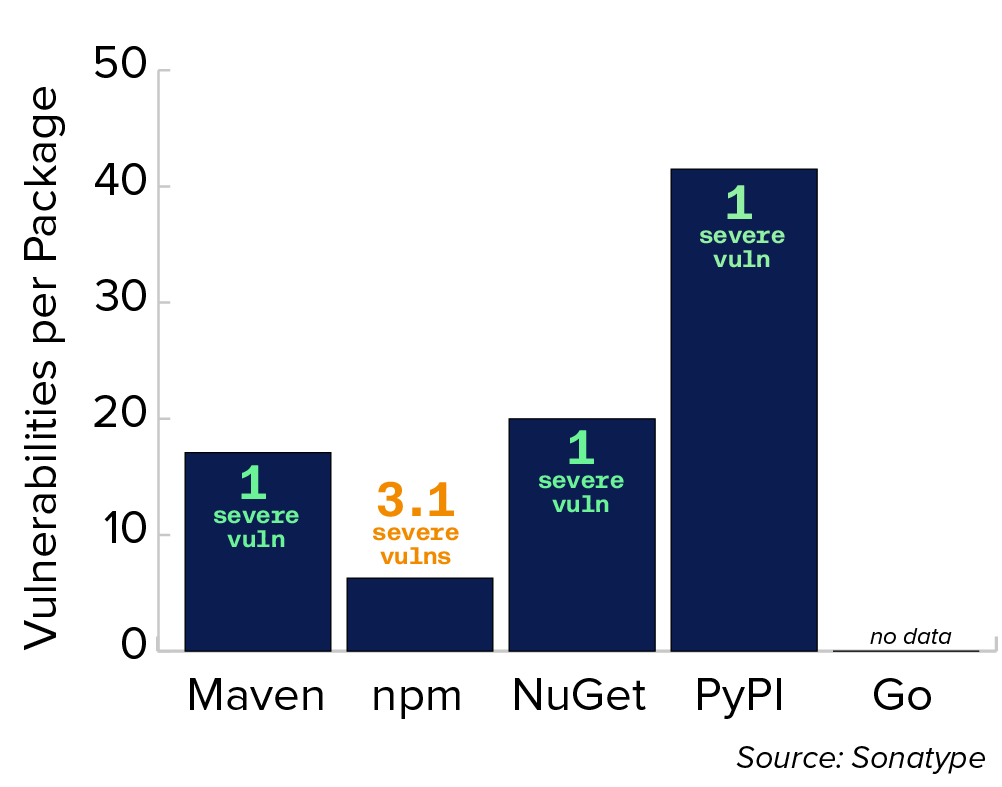

Ecosystems differ in how many dependencies applications typically pull in, which can directly affect the size of your application. A common perception is that Java and JavaScript applications have many dependencies, while other ecosystems are more manageable. This can breed complacency among developers working in other ecosystems, based on the false assumption that fewer dependencies mean fewer vulnerabilities or easier management.

Our data shows that the PyPI ecosystem (Python’s package ecosystem, whose applications tend to have fewer dependencies) has more vulnerabilities per package than other ecosystems. Enterprises cannot rely on “low-dependency” languages for safety: even with a lower average number of components, you are still very much at risk and need to practice efficient dependency management. That requires a good SCA tool that covers a wide breadth of ecosystems.

Further, most enterprises use more than one ecosystem across their application portfolio, which underscores the importance of an SCA tool with comprehensive ecosystem support.

Figure 4.4 Average Number of Components (Packages) Per Application, by Ecosystem

This bar chart shows the average number of ecosystem packages used by an application, covering Maven, npm, NuGet, PyPI, and Go.

Figure 4.5 Average Number of Vulnerabilities in Top 10 Most Popular Packages, by Ecosystem

This bar chart shows the average vulnerability counts for the top 10 most popular packages by ecosystem (Maven, npm, NuGet, PyPI, and Go), along with the number of severe vulnerabilities.

Mature Dependency Management Workflows

Risk-based prioritization is essential to minimize the time spent remediating vulnerabilities. An organization can assess and prioritize risks to optimize the remediation process in several ways, including:

- Performing Reachability Analysis to determine which vulnerable components in the dependency chain are actually called by the application.

- Assessing risks due to vulnerabilities that are exploitable in the runtime environment of the application.

Reachability Analysis is an optimization approach for getting close to zero risk in a limited amount of time. It detects vulnerable method signatures on an application’s execution paths (its call graph), whether the vulnerable method is called directly from the application or indirectly through other OSS libraries. Teams can then target their remediation efforts at these reachable vulnerabilities.
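To make the call-graph idea concrete, here is a minimal Python sketch of the core reachability check: a breadth-first search from the application’s entry points that reports each vulnerable method it can reach, together with one call path. It assumes the call graph has already been built by some other tool; all method names are hypothetical.

```python
from collections import deque
from typing import Optional


def reachable_vulnerable(
    call_graph: dict[str, list[str]],
    entry_points: list[str],
    vulnerable: set[str],
) -> dict[str, list[str]]:
    """Return {vulnerable_method: call_path} for each vulnerable method reachable from an entry point."""
    parent: dict[str, Optional[str]] = {e: None for e in entry_points}
    queue = deque(entry_points)
    while queue:
        method = queue.popleft()
        for callee in call_graph.get(method, []):
            if callee not in parent:  # first visit: remember which caller reached it
                parent[callee] = method
                queue.append(callee)
    paths: dict[str, list[str]] = {}
    for target in vulnerable:
        if target in parent:  # reached, so walk parents back to an entry point
            path: list[str] = []
            node: Optional[str] = target
            while node is not None:
                path.append(node)
                node = parent[node]
            paths[target] = path[::-1]
    return paths


graph = {
    "app.Main.handle": ["libA.Parser.parse"],
    "libA.Parser.parse": ["libB.Codec.decode"],    # transitive call into another OSS library
    "libA.Parser.render": ["libC.Template.eval"],  # never called by the application
}
result = reachable_vulnerable(graph, ["app.Main.handle"], {"libB.Codec.decode", "libC.Template.eval"})
print(result)
# {'libB.Codec.decode': ['app.Main.handle', 'libA.Parser.parse', 'libB.Codec.decode']}
```

Here `libC.Template.eval` is vulnerable but never on an execution path, so it drops out, while `libB.Codec.decode` stays even though the application reaches it only through another library.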

However, the effectiveness of this kind of prioritization greatly depends on a combination of the following factors:

- The accuracy of the call graph generated

- The accuracy of the CVE scores being targeted for remediation (see the importance of quality data)

- The CWE (Common Weakness Enumeration) entry, which helps determine the impact if the vulnerability manifests itself in an exploitable manner

Restricting remediation to vulnerabilities that Reachability Analysis detects and that have the highest CVE scores (9 or 10), without considering their CWEs, could create a false sense of security.

Not all declared vulnerabilities manifest themselves as exploitable in a given runtime environment. An application’s runtime environment (public SaaS, distributed for customers to run and operate, with access to sensitive information, and so on) can determine the priority of its remediation. Knowing the declared CWE and how accurate it is, including a thorough analysis of base-level weaknesses, variant weaknesses, and composite weaknesses (sets of weaknesses that must be reached consecutively to produce an exploitable vulnerability), is key to avoiding such risks.

EXAMPLES:

- Network exploits could occur only if the application is meant to run on public WAN/LAN or Internet.

- Applications processing sensitive data such as PII, classified information, and healthcare data are at risk of accidental exposure due to compromised network security or malicious attempts to gain access.
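These examples suggest combining reachability, score, CWE, and runtime context instead of filtering on score alone. The Python sketch below is one hypothetical way to do that. The priority tiers, the 7.0 and 9.0 cut-offs, and the grouping of CWE IDs (CWE-89 SQL injection, CWE-79 cross-site scripting, CWE-502 deserialization of untrusted data, CWE-200 exposure of sensitive information) into “network” and “data exposure” buckets are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppContext:
    network_exposed: bool         # runs on a public WAN/LAN or the Internet
    handles_sensitive_data: bool  # processes PII, classified, or healthcare data


@dataclass(frozen=True)
class Vuln:
    vuln_id: str
    score: float     # 0-10 severity score
    cwe: str         # e.g. "CWE-89"
    reachable: bool  # result of reachability analysis


# Hypothetical grouping of CWEs by the runtime context in which they matter most.
NETWORK_CWES = {"CWE-89", "CWE-79", "CWE-502"}
DATA_EXPOSURE_CWES = {"CWE-200"}


def priority(v: Vuln, ctx: AppContext) -> int:
    """Return 1 (fix first) to 4 (defer); tiers and cut-offs are illustrative assumptions."""
    context_relevant = (
        (ctx.network_exposed and v.cwe in NETWORK_CWES)
        or (ctx.handles_sensitive_data and v.cwe in DATA_EXPOSURE_CWES)
    )
    if v.reachable and (v.score >= 9.0 or context_relevant):
        return 1
    if v.reachable and v.score >= 7.0:
        return 2
    if context_relevant:
        return 3  # not shown to be reachable, but its weakness matters in this environment
    return 4


public_api = AppContext(network_exposed=True, handles_sensitive_data=True)
batch_job = AppContext(network_exposed=False, handles_sensitive_data=False)
sqli = Vuln("EXAMPLE-0003", 6.5, "CWE-89", reachable=True)
print(priority(sqli, public_api), priority(sqli, batch_job))  # 1 4
```

The same medium-scored SQL injection lands at the top of the queue for an Internet-facing service and at the bottom for an isolated batch job, which a score-only filter would not distinguish.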

Aligned with Cybersecurity Compliance Requirements

For organizations serving the federal sector, or serving other organizations that support the federal sector, maintaining an optimal security posture is a hard requirement to stay in compliance with FISMA policies. This is achieved by remediating all “high” and “critical” vulnerabilities in the production environment.

Continuous monitoring and reporting features in SCA tools provide real-time insight into the severity of vulnerabilities as they are discovered at various stages of the development cycle. To stay in compliance, developers can target “high” and “critical” vulnerabilities and avoid spending time remediating the rest.
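A minimal Python sketch of that compliance filter: keep production findings rated High or Critical, which under the CVSS v3.x scale means a base score of 7.0 or above, and order them most severe first. The record fields and sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    vuln_id: str
    score: float      # CVSS v3.x base score
    environment: str  # where the affected component is deployed, e.g. "production"


def compliance_backlog(findings: list[Finding]) -> list[Finding]:
    """Production findings rated High (7.0-8.9) or Critical (9.0-10.0), most severe first."""
    in_scope = [f for f in findings if f.environment == "production" and f.score >= 7.0]
    return sorted(in_scope, key=lambda f: f.score, reverse=True)


findings = [
    Finding("EX-1", 9.1, "production"),
    Finding("EX-2", 7.0, "production"),
    Finding("EX-3", 8.2, "staging"),     # High, but not in production
    Finding("EX-4", 5.0, "production"),  # Medium: outside the compliance target
]
print([f.vuln_id for f in compliance_backlog(findings)])  # ['EX-1', 'EX-2']
```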

Open Source License Risk Profile

Licensing generally lies outside the interests of developer and security teams. Swept up in waves of creativity and innovation, developers use the latest and most popular components available to stay ahead of the curve. Neglecting open source licenses, on the assumption that open source is free to use, is a huge business risk. When legal action and litigation come later, organizations can face years of dispute and financial setbacks, including fines and lost revenue.

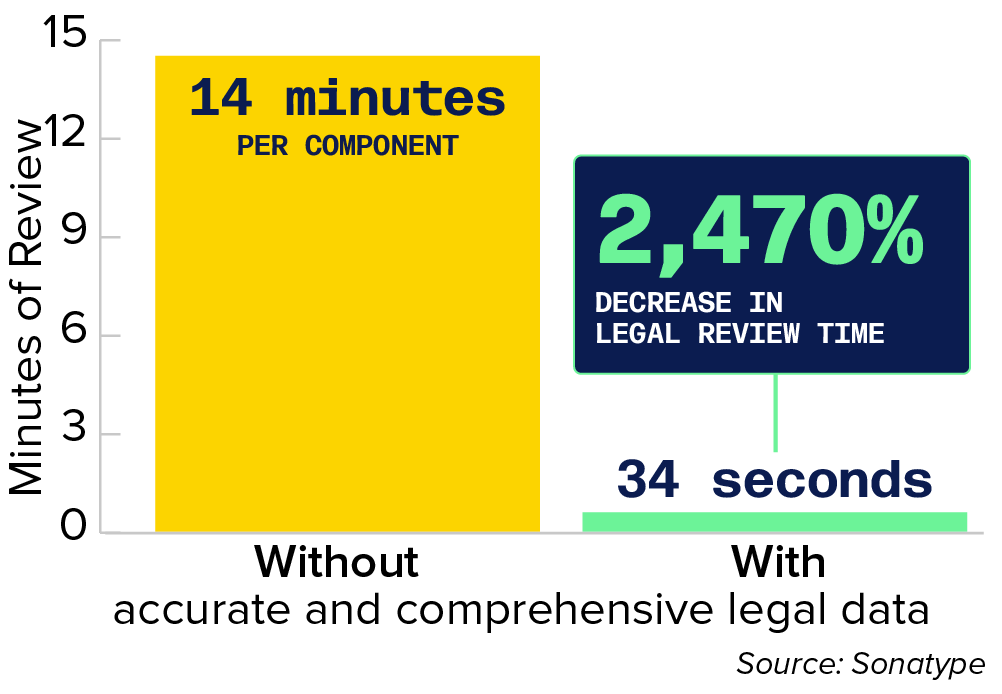

Open source licensing issues, if investigated at all, generally do not surface before release because of the effort involved. Without an SCA tool, reviewing OSS licenses is a manual, time-consuming process that can involve legal teams, which puts a drag on teams and resources outside development. To save resources, most organizations conduct an OSS license compliance review only once, just before a production release. That is very late in the development cycle and can ultimately create more waste.

95.56M total releases with a license

Licenses Can Change from Version to Version

A typical open source project has an overarching license, which might not apply to all individual files under the project. As contributions to an open source project increase, individual pieces of code can have different licenses, which could impact the project downstream.

Figure 4.6 Projects with One or More License Changes

6% of projects had one or more license changes

Source: Sonatype

This pie chart shows the percentage of projects that had one or more license changes across their version history.

Some vendor-owned open source projects can also be relicensed to restrict usage or gain more control. Examples include Terraform (from the Mozilla Public License v2.0 to the Business Source License (BSL) v1.1), Elasticsearch (from the Apache 2.0 License to a non-open source dual license based on the SSPL), and Redis (from the Berkeley Software Distribution (BSD) License to the Redis Source Available License).

The BSL also gives the vendor the right to change the license further down the road with little or no notice.

This data depicts the magnitude of license changes tracked for multiple projects.

Although license changes affect only about 6% of projects overall, the remediation work is largely manual and can set release dates back unexpectedly. Reviewing candidate upgrade versions requires manual checks, causing delays and, in some cases, preventing upgrades altogether.

An SCA tool with a built-in license feature and an accurate OSS legal compliance database can identify potential compliance and legal issues immediately, making legal review 2,470% faster.

By reviewing SCA reports, teams can detect license changes between versions of a component. A correctly configured SCA tool can flag these changes early in the development cycle, leaving enough time for the linked manual remediation processes (escalating, finding forked projects with non-restrictive licenses, or adopting commercially available licenses).
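Detecting a license change between versions is essentially a diff over each release’s declared licenses. The Python sketch below assumes the release history (in release order, using SPDX license identifiers) has already been retrieved from some source; the component history and the allowed-license policy are hypothetical, although the MPL-2.0 to BUSL-1.1 change mirrors the kind of relicensing described in this chapter.

```python
def license_changes(history: list[tuple[str, frozenset[str]]], allowed: set[str]) -> list[dict]:
    """Compare consecutive releases and report each point where the declared license set changes."""
    changes = []
    for (prev_ver, prev_lic), (ver, lic) in zip(history, history[1:]):
        if lic != prev_lic:
            changes.append({
                "from": prev_ver,
                "to": ver,
                "added": sorted(lic - prev_lic),
                "removed": sorted(prev_lic - lic),
                # Licenses in the new release that fall outside the organization's policy.
                "policy_violations": sorted(lic - allowed),
            })
    return changes


history = [
    ("1.4.0", frozenset({"MPL-2.0"})),
    ("1.5.0", frozenset({"MPL-2.0"})),
    ("1.6.0", frozenset({"BUSL-1.1"})),
]
allowed = {"Apache-2.0", "MIT", "MPL-2.0"}
for change in license_changes(history, allowed):
    print(change)
# {'from': '1.5.0', 'to': '1.6.0', 'added': ['BUSL-1.1'], 'removed': ['MPL-2.0'], 'policy_violations': ['BUSL-1.1']}
```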

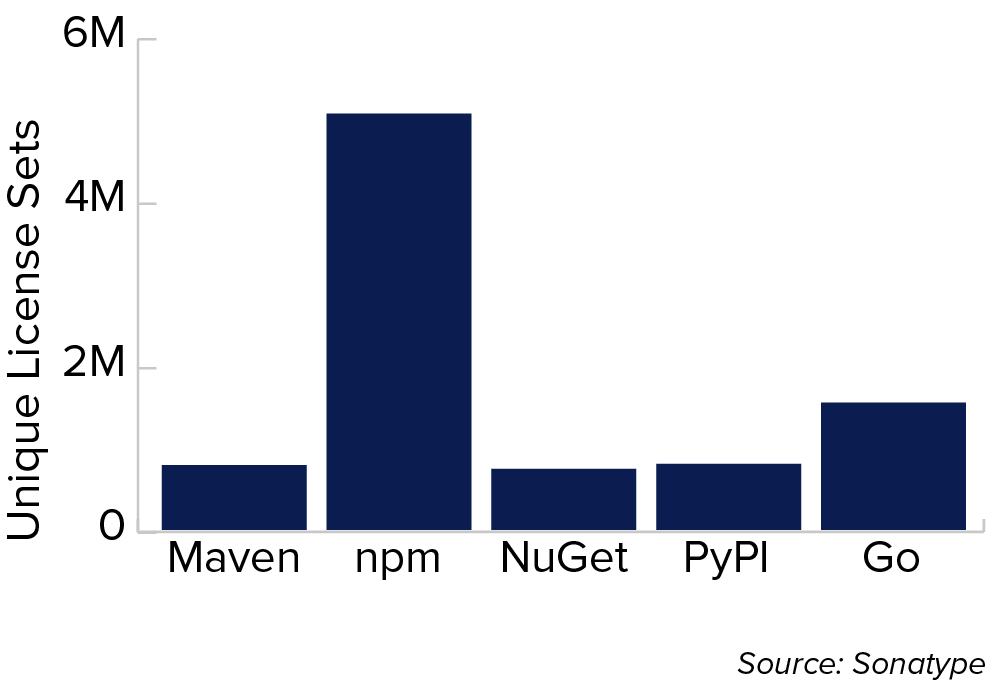

Figure 4.7 Unique License Sets per Project

This bar chart shows the sum of unique license sets per project (not including the first license set) by ecosystem (Maven, npm, NuGet, PyPI, and Go).

Figure 4.8 Open Source Compliance Legal Review Time

This bar chart illustrates efficiency gains in legal review time by comparing duration without and with accurate and comprehensive legal data.

Why Dependency Management Needs to be Much More than “Just Update to the Latest Version”

Simply put, the latest version of a component may not be the best version to use. A common practice for avoiding known security issues is to upgrade to the latest version of a component, so much so that this step is often automated. However, the latest version’s license may be more restrictive than the license of the version currently in use, introducing new business risks.
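The Python sketch below illustrates one hypothetical alternative to “always take the latest”: choose the smallest upgrade that has no known vulnerabilities and whose licenses all stay within policy. The `Release` record, the sample releases, and the policy set are assumptions for illustration, and versions are assumed to be purely numeric.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Release:
    version: str
    has_known_vulns: bool
    licenses: frozenset[str]


def parse_version(v: str) -> tuple[int, ...]:
    """Numeric dotted version; trailing zeros dropped so '3.0' == '3.0.0'."""
    parts = [int(p) for p in v.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def pick_upgrade(current: str, releases: list[Release], allowed: set[str]) -> Optional[Release]:
    """Smallest newer release with no known vulnerabilities and only allowed licenses, or None."""
    cur = parse_version(current)
    candidates = [
        r for r in releases
        if parse_version(r.version) > cur
        and not r.has_known_vulns
        and r.licenses <= allowed  # every license on the release must be permitted
    ]
    return min(candidates, key=lambda r: parse_version(r.version), default=None)


releases = [
    Release("2.4.0", True, frozenset({"Apache-2.0"})),   # current version, vulnerable
    Release("2.5.1", False, frozenset({"Apache-2.0"})),
    Release("3.0.0", False, frozenset({"SSPL-1.0"})),    # latest, but relicensed
]
choice = pick_upgrade("2.4.0", releases, {"Apache-2.0", "MIT"})
print(choice.version if choice else None)  # 2.5.1
```

Preferring the smallest acceptable upgrade is a design choice that tends to minimize breaking changes; a team could just as reasonably pick the newest acceptable release instead, as long as the vulnerability and license checks still apply.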

An SCA tool can apply context-sensitive license policies that match how an application is used and flag violations within the development cycle.

Because many OSS license obligations come into effect depending on the application’s production environment (distributed, hosted, or internal), compliance issues may not arise until release. License policies can therefore be set to flag non-compliance at specific stages of the SDLC (pre-release or release).
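Context-sensitive policy can be modeled as a lookup from license to the deployment contexts in which it needs review. The Python sketch below is hypothetical: the policy table is an example an organization might configure, not a statement of what any license requires, and the component names are placeholders.

```python
from enum import Enum


class Deployment(Enum):
    DISTRIBUTED = "distributed"
    HOSTED = "hosted"
    INTERNAL = "internal"


# Hypothetical organizational policy: deployment contexts in which each license needs review.
REVIEW_REQUIRED = {
    "GPL-3.0-only": {Deployment.DISTRIBUTED},
    "AGPL-3.0-only": {Deployment.DISTRIBUTED, Deployment.HOSTED},
    "SSPL-1.0": {Deployment.DISTRIBUTED, Deployment.HOSTED},
}


def license_violations(components: dict[str, set[str]], deployment: Deployment) -> list[tuple[str, str]]:
    """Return (component, license) pairs that need review for this deployment context."""
    return [
        (name, lic)
        for name, licenses in components.items()
        for lic in sorted(licenses)
        if deployment in REVIEW_REQUIRED.get(lic, set())
    ]


components = {"lib-a": {"MIT"}, "lib-b": {"AGPL-3.0-only"}, "lib-c": {"GPL-3.0-only"}}
for ctx in Deployment:
    print(ctx.value, license_violations(components, ctx))
```

The same dependency set produces different results per context: nothing is flagged for an internal tool, while a distributed product flags both copyleft components.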

Backed by accurate and trusted OSS license data, organizations can review attribution reports and license obligations to stay compliant.

Next: Best Practices in Software Supply Chain Management