CVE-2024-3094: The targeted backdoor supply chain attack against XZ and liblzma


As sure as long weekends arrive in the western world, so too does news of new software supply chain attacks. The Easter bank holidays were no exception, with the discovery of a targeted attack against the popular XZ compression utility, which ships in many Linux distributions such as Fedora and Debian, to name a few.

The Sonatype team was alerted on Friday, March 29, 2024, along with the rest of the world, when this attack was uncovered by a curious developer who noticed that their ssh logins were taking 500ms instead of 100ms.

We think this is one of the more complicated "benevolent stranger" malware injections to date, and it deserves amplification. This post discusses all the elements that were discovered over the weekend and gives our stance on this incident.

The practical end result is that the world now has another patching effort in front of it: discovering which systems are affected by the bad packages and upgrading to a known good version, which is currently understood to be anything below 5.6.0. The malicious code appears to have been distributed only in the operating system packages and is not present in the java-xz package. This may change as more research is performed.

The situation is still developing, and the attacker's contribution history is being studied. As we find out more, we will relay information about the package here.

What is the XZ package and what does it do?

To put it briefly, the XZ utility and its associated library, liblzma, are Linux utilities for data compression, much like bzip2 or gzip. XZ has gained popularity over the years and is commonly used for this purpose. Compression is useful in many use cases and is often seen in critical procedures performed by the operating system. This is speculated to be the reason the assailant chose this project specifically as their target.

Who is affected by the backdoor?

The good news is that no Java software dependencies are affected, according to our research and as discussed by the Apache Software Foundation. Although there is an xz distribution in Java under org.tukaani.xz, its last release was 3 years ago, and all subsequent commits have been made by the original maintainer and can be considered safe.

Instead, operating systems that distribute the xz package, and the liblzma library installed with it, should be considered affected. The affected versions are 5.6.0 and 5.6.1, both released in the last few months and now pulled from all the distro package registries. Any system that still has either of these two versions installed should be considered compromised.
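For triage, this version rule can be expressed as a small check. The following is a minimal Python sketch, assuming you already have a version string from your package manager or inventory; `parse_upstream_version` and `is_affected` are hypothetical helper names, and the check only looks at the leading major.minor.patch triple of the string.

```python
import re
from typing import Optional, Tuple

# Upstream xz/liblzma releases that shipped the backdoor (CVE-2024-3094).
AFFECTED_VERSIONS = {(5, 6, 0), (5, 6, 1)}


def parse_upstream_version(version: str) -> Optional[Tuple[int, int, int]]:
    """Extract the leading major.minor.patch triple, e.g. '5.6.1-1' -> (5, 6, 1)."""
    match = re.match(r"\s*(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def is_affected(version: str) -> bool:
    """True only for the two backdoored upstream releases; unparsable input is False."""
    return parse_upstream_version(version) in AFFECTED_VERSIONS


if __name__ == "__main__":
    for candidate in ["5.6.1", "5.6.0-0.2.fc40", "5.4.6", "5.2.5-2.1"]:
        print(candidate, "affected" if is_affected(candidate) else "not affected")
```

Distribution packages sometimes encode rollbacks or backported fixes in unusual version strings that this check would misread, and a string it cannot parse is reported as not affected. Treat the result as a prompt to investigate and confirm against your vendor's advisory.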

When considering what to patch, remember that "operating systems" means not just Linux boxes but also containers and any development machine that may use tools like Homebrew. So far, at least Fedora, Kali, and some unstable releases of Debian have been confirmed to have shipped the malicious versions.

What is the malicious code in xz?

The malware targets the sshd process, commonly used to facilitate remote access on Linux servers. It essentially allows the attacker to bypass the entire ssh authentication process and thus gain access to the server. It needs to run on a system that uses glibc, most likely one that uses .deb or .rpm packages.

The attack abuses glibc's IFUNC (indirect function) mechanism, which lets a library choose a function implementation at load time, to hook cryptographic routines that sshd relies on during authentication, such as OpenSSL's RSA_public_decrypt. The most widely reported effect is that it lets the attacker bypass the ssh daemon's authentication procedure, allowing unrestricted access. The backdoor's obfuscated code also adds measurable overhead to each login, which is how it was discovered.

There are good deep dives into the technical details of this issue that can be found:

Essentially, any Unix-like machine, including macOS-based personal devices, on which xz or liblzma version 5.6.0 or 5.6.1 is installed should be considered backdoored and needs to be remediated. Prioritize production systems that expose ssh to the public internet.

How did the backdoor get merged?

What makes this attack stand out is that the assailant followed the benevolent stranger attack playbook nearly to the letter. It is very similar in motivation and vector to the attacks performed against 3CX and SolarWinds, just played out over a longer term. This is clearly a supply chain attack, but it is probably also the most elaborate social engineering operation we have seen.

Firstly, the XZ project had been maintained by a sole developer for almost two decades. It is well liked, moderately popular, and under-resourced relative to the apparent needs of its community. In 2022, the project maintainer began receiving pressure from suspected sock puppet accounts about the slow pace at which updates were being applied.

This type of behavior is not uncommon in open source projects that become popular: maintainers are inundated with requests for work without the requesters actually helping. This dynamic has led some authors to protest within their software, as in the famous case of the colors and faker packages, where the author deliberately made the software print protest messages about their free labour. The older a project gets without volunteers, the more likely it is to be subject to security weaknesses and vulnerabilities.

In the case of xz, the maintainer said that the project was their hobby and that they would welcome another maintainer on board. One such maintainer appeared: Jia Tan. Using an account with a short history, they approached the maintainer with initially innocent patches and began contributing code. With the benefit of hindsight, it is now evident that this was social engineering, with the pressure ratcheted up to make the maintainer relent. As is standard in open source, when you pick up the shovel you begin to gain trust, and over time Jia Tan became more and more trusted.

Once Jia gained the trust of the maintainer, they began suggesting and adding features. Jia Tan also advocated for the xz project's adoption downstream into Linux distributions due to its now advanced features, again a very normal and expected activity. With the benefit of hindsight, though, the timeline of these activities is extremely accelerated compared to a normal open source contributor journey. They went from contributor to signing releases in the course of a year.

Over the next 24 months, they added encrypted code that was activated in stages. The malicious code was hidden in two binary test files embedded within the source code. Normally this would be alarming, but this was a compression package after all, so binary test files were not out of the ordinary. That allayed any doubts and left the malware hidden in plain sight. The extra work this hidden code performed at runtime is what caused the slowdown the developer later observed. More on these below.

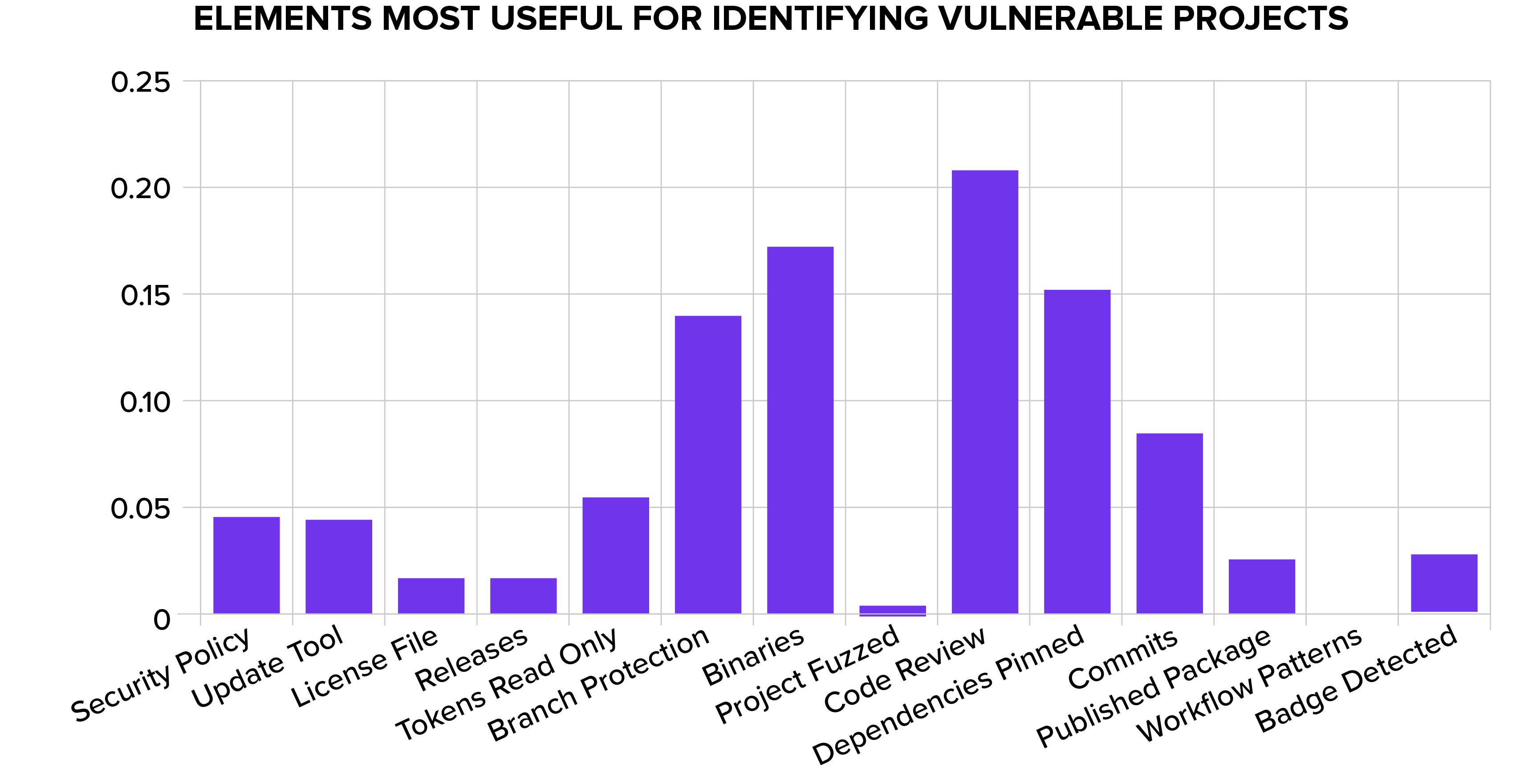

Though everything seemed above board and would have passed any human sniff test, it is worth mentioning that in our past modeling of which projects are most likely to contain security vulnerabilities, we found that binary files are one of the largest indicators of a security vulnerability being present.
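As a rough illustration of that signal, the sketch below flags files in a source tree that look binary, using the common heuristic that text files rarely contain a NUL byte. It is not Sonatype's model; `looks_binary` and `find_binary_files` are hypothetical names, and the 8 KiB sample size is an arbitrary assumption. The `.xz` container format starts with the magic bytes `FD 37 7A 58 5A 00`, so compressed test fixtures like the ones used in this attack would be flagged.

```python
from pathlib import Path
from typing import Iterator


def looks_binary(path: Path, sample_size: int = 8192) -> bool:
    """Heuristic: treat a file as binary if its first bytes contain a NUL byte."""
    with path.open("rb") as handle:
        return b"\x00" in handle.read(sample_size)


def find_binary_files(root: Path) -> Iterator[Path]:
    """Yield every regular file under root that looks binary, skipping .git internals."""
    for path in sorted(root.rglob("*")):
        if ".git" in path.relative_to(root).parts:
            continue
        if path.is_file() and looks_binary(path):
            yield path
```

Running `for p in find_binary_files(Path(".")): print(p)` in a checkout produces a list for review, not a verdict: in a compression project most hits will be legitimate fixtures, which is exactly why this signal is easy to overlook.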

How was it discovered?

The malware was discovered by Andres Freund, a Microsoft developer, who wondered why his ssh connections had begun slowing down. As he investigated, he traced the slowdown to liblzma, which was being loaded into the sshd process and contained the injected code. He raised the alarm, which ultimately resulted in the packages being pulled and this incident being declared.

How do I mitigate the issue?

You should aim to identify every system you control and determine which of them have the affected packages installed. This includes not just virtual machines but also containers based on any Linux distro.
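On a single machine, a quick first check is to ask the installed `xz` binary which versions it reports. The sketch below assumes that `xz --version` prints lines of the form `xz (XZ Utils) 5.4.5` and `liblzma 5.4.5`; `parse_xz_version_output` and `check_local_xz` are hypothetical helpers. It only covers the `xz` found on `PATH`, not every copy of liblzma in containers or other library directories, so it complements rather than replaces a package inventory.

```python
import re
import shutil
import subprocess
from typing import Dict, Optional

AFFECTED = {"5.6.0", "5.6.1"}


def parse_xz_version_output(text: str) -> Dict[str, str]:
    """Map 'xz' and 'liblzma' to the version strings found in `xz --version` output."""
    versions: Dict[str, str] = {}
    for line in text.splitlines():
        # Expected lines look like "xz (XZ Utils) 5.4.5" and "liblzma 5.4.5".
        match = re.match(r"^(xz|liblzma)\b.*?(\d+\.\d+\.\d+)", line.strip())
        if match:
            versions[match.group(1)] = match.group(2)
    return versions


def check_local_xz() -> Optional[bool]:
    """True if the local xz reports an affected version, False if not, None if xz is absent."""
    if shutil.which("xz") is None:
        return None
    result = subprocess.run(["xz", "--version"], capture_output=True, text=True, check=False)
    versions = parse_xz_version_output(result.stdout)
    return any(version in AFFECTED for version in versions.values())
```

A `True` result means the host should be treated as compromised and remediated; `None` only means this particular check could not find `xz`, not that the host is clean.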

Best practice for this effort is to generate an SBOM (software bill of materials) or a system BOM for all of your containers and virtual machines and to monitor them for any new findings. Here, Sonatype Container can help by auditing your container package indexes for any known bad packages.
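If your SBOMs are in CycloneDX JSON format, checking them for the affected releases can be automated. This sketch assumes a top-level `components` array whose entries carry `name` and `version` fields; the package names in `KNOWN_BAD` are assumed labels for how distributions may name xz and liblzma, so adjust them to your ecosystem. Nested components are not traversed, and `upstream` and `find_known_bad` are hypothetical helpers.

```python
import json
from typing import Dict, List, Set

# Assumed package names; distributions label xz and liblzma differently.
_BAD_VERSIONS = {"5.6.0", "5.6.1"}
KNOWN_BAD: Dict[str, Set[str]] = {
    name: _BAD_VERSIONS for name in ("xz", "xz-utils", "xz-libs", "liblzma", "liblzma5")
}


def upstream(version: str) -> str:
    """Drop a distro revision suffix, e.g. '5.6.1-1' -> '5.6.1'."""
    return version.split("-", 1)[0]


def find_known_bad(sbom_json: str) -> List[str]:
    """Return 'name@version' for each top-level CycloneDX component on the watch list."""
    sbom = json.loads(sbom_json)
    hits: List[str] = []
    for component in sbom.get("components", []):
        name = component.get("name", "")
        version = component.get("version", "")
        if upstream(version) in KNOWN_BAD.get(name, set()):
            hits.append(f"{name}@{version}")
    return hits
```

Run against every stored SBOM whenever a new advisory lands, a check like this turns "which of our systems are affected?" into a query rather than a manual hunt.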

It's worthwhile to prioritize any infrastructure with public-facing interfaces, but don't forget other privileged hardware, such as developer machines or internal build infrastructure, that may be high-value targets.

It is also evident that information about this issue will continue to arrive as researchers dig deeper into this code. Because the assailant worked on the code for about two years, the picture may change over time, and it is prudent to expect further revisions to the list of known safe versions.

Why did this happen?

Many perspectives have been raised about this attack. The maintainer was a sole, unpaid enthusiast, and as they came to trust the attacker more, they began letting patches through without oversight, even when the patches didn't actually fix the issues they claimed to. It would be completely wrong to blame the original maintainer for this lack of oversight: it is, after all, their hobby project, something they did on the side out of passion. In fact, they were taking a break from contributing altogether at the time of this incident, having already served for many years as the sole torchbearer for a much larger community.

With the benefit of hindsight, it is safe to say the attackers chose their target carefully: a critical project with large adoption, maintained under pressure. When put together with the sequence of evidence, it is easy to conclude that this was a deliberate operation from beginning to end.

What could have been done to avoid falling foul of this lure? Not much, I'm afraid. Some of the commentary raises a consistent criticism that the industry should do more to support these kinds of critical projects. The grim thought many have had is: how many other projects like xz are susceptible to this type of operation? Much like underinvestment in physical infrastructure such as highways or water utilities, a significant portion of our digital infrastructure has seen its old maintainers retire with no new ones emerging to take up the mantle. The reasons for this are manifold and deserve future examination, but needless to say, xz is not unique in this regard.

By coincidence, this hobby project became part of critical infrastructure and came close to universal adoption. This phenomenon is not unique to the xz project, or to open source for that matter. Due to the network effect, any piece of code can transform from a hobby into a universally leveraged piece of critical infrastructure. Adopting code takes little effort, so it is done in the name of efficiency, which serves the incentives most of us have: to ship software as fast as possible with as good a quality as possible.

On one level, this certainly means that one way to minimize the risk posed by such attacks is to begin contributing back to the open source software your business depends upon.

From a systems perspective, the reality is that much of our IT infrastructure is beholden to a deep supply chain made up of components just like this one, relying upon countless open source projects. Because of this, according to one study, replacing open source altogether would instantly triple an organization's software development spend.

This fact of digital nature is not lost on adversaries and attackers, nation states and otherwise. The long game the attacker played suggests deep pockets, pointing the finger at an intelligence operation. The desired outcome of such an operation would certainly have been the complete backdooring of most digital infrastructure, and we were possibly mere months away from this plan completing.

This is not the first such attack. At the application level, we have observed many such incidents, ranging from several manufactured crypto heists and open source hijacks to backdoored releases of other popular packages.

The lesson we absolutely should not take from all this is that open source should be considered unsafe or that we should minimize its usage. Far from it: the open nature of the project is what allowed this attack to be uncovered in the first place.

What should happen instead is that organizations take a cold, hard look at their risk models when it comes to open source. The reality is that adopting a technology comes with a commitment to keep it maintained, and the risk of something going wrong rests solely on the shoulders of the organization adopting the code, not its maintainers. The only mitigation for such risk is to identify the affected systems quickly and to jettison the malicious or vulnerable code as fast as possible.

This, combined with a genuine effort to replenish the pool of maintainers for such projects by dedicating time, energy, and resources, is the only way to sustain what is already out there. CISA and OpenSSF have initiatives toward this end, but more is needed from everyone if they are to succeed.

Practical steps to minimize future incident impacts

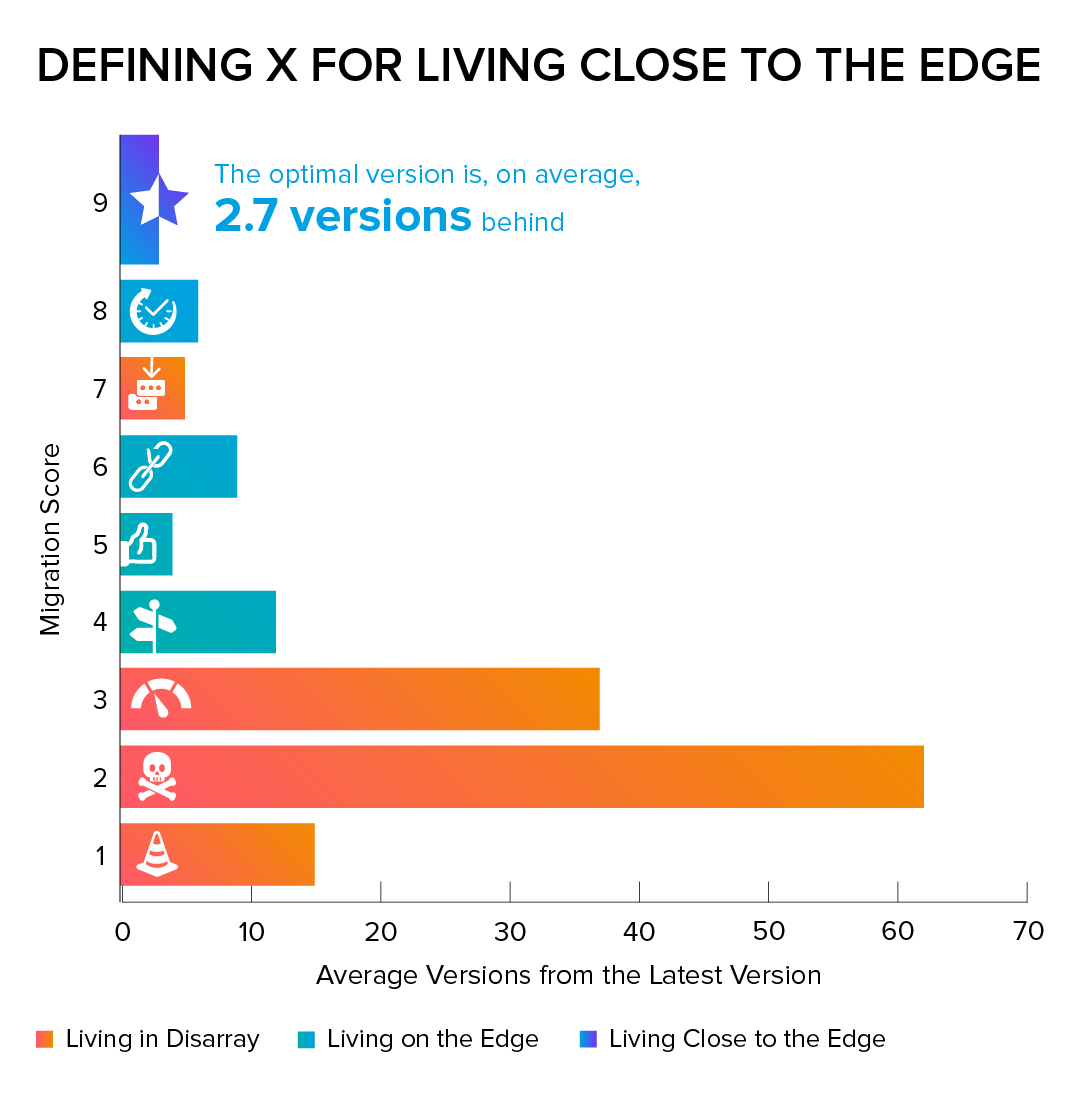

There are patching strategies one can follow, such as a best practice we identified in our research a few years ago: the optimal version of any package is 2.7 versions behind the latest release. In this case, following it would have kept the package in a safe range.
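Read literally, that rule can be turned into a simple selection policy. The sketch below is one naive interpretation, assuming a list of releases ordered oldest to newest and rounding the 2.7 figure to the nearest whole release; `pick_lagged_release` is a hypothetical helper, not part of any Sonatype tool.

```python
from typing import List


def pick_lagged_release(releases: List[str], lag: float = 2.7) -> str:
    """Pick the release roughly `lag` versions behind the newest one.

    `releases` must be ordered oldest -> newest. The fractional lag is rounded
    to the nearest whole release and clamped to the oldest available release.
    """
    if not releases:
        raise ValueError("no releases to choose from")
    steps_back = min(round(lag), len(releases) - 1)
    return releases[-1 - steps_back]
```

Given the xz releases 5.4.4, 5.4.5, 5.4.6, 5.6.0 and 5.6.1 in that order, it selects 5.4.5, outside the affected range. A real policy would also have to account for security fixes that make a newer release mandatory.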

There is tremendous naivete in the industry in assuming that all code obtained from reputable sources is safe forever. For the most part, it absolutely is. However, when it is proven NOT to be, it is imperative that the organization can mount a coordinated patching effort in 48 hours or less, with full knowledge of the affected targets. Anything less means failing to learn from similar incidents in years past and ignoring the fact that these types of attacks will become more frequent.

All of the risk may come from the open source code, and yes, efforts to keep it safe should be made at the open source project level. However true that may be, another truth also exists: the RISK of that open code rests solely with the organization adopting it, directly or indirectly. Any method to minimize this risk should be undertaken, and doing so will soon be required by directives such as the EU's NIS2 directive, which will require critical organizations to notify regulators of any such incident within 24 hours of discovery and to supply them with a mitigation plan.

Achieving such monumental feats might seem daunting, but the solution is simple: look inward at your processes, collect the necessary information, and automate the identification process. The fundamental element to collect is a software bill of materials (SBOM) for every piece of software, operating system, and open source dependency bought, adopted, built, or put into production. These SBOMs should then be monitored automatically for ANY new vulnerability, issue, or release, with each finding evaluated automatically against a predetermined policy. Our platform can help here.
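Evaluating findings against a predetermined policy can be as simple as mapping each finding to an action. The sketch below uses a hypothetical three-level policy (block malicious packages, fail on high CVSS scores, warn otherwise); the `Finding` fields, the action names, and the 7.0 threshold are all assumptions for illustration, not the behavior of Sonatype SBOM Manager or any other product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Finding:
    component: str
    version: str
    kind: str          # "malicious" or "vulnerability" (hypothetical taxonomy)
    cvss: float = 0.0  # CVSS base score; 0.0 when not applicable


def evaluate(finding: Finding, fail_threshold: float = 7.0) -> str:
    """Map one finding to an action under a simple, hypothetical policy."""
    if finding.kind == "malicious":
        return "block"  # remove from every environment immediately
    if finding.cvss >= fail_threshold:
        return "fail"   # stop the build or release until remediated
    return "warn"       # track it without interrupting delivery


def triage(findings: List[Finding]) -> List[Tuple[str, str]]:
    """Return (component@version, action) pairs, most severe actions first."""
    order = {"block": 0, "fail": 1, "warn": 2}
    actions = [(f"{f.component}@{f.version}", evaluate(f)) for f in findings]
    return sorted(actions, key=lambda pair: order[pair[1]])
```

Only findings that cross a threshold interrupt delivery, so teams are not forced to upgrade everything blindly.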

Blindly upgrading everything is a waste of energy, which is why most organizations don't do it. Applying intelligent rules automatically when new findings are discovered helps organizations stay on top of their issues. It is for this fundamental reason that we recently released our Sonatype SBOM Manager product: to help automate this process end to end.

This is not the first attack of its kind, nor will it be the last. It is a wake-up call: we as an industry must rise to the challenge and be better prepared. At the same time, we must support our maintainers. Our digital world depends on it.

Ilkka serves as Field CTO at Sonatype. He is a software engineer with a knack for rapid web development and cloud computing and with technical experience on multiple levels of the XaaS cake. Ilkka is interested in anything and everything, always striving to learn any relevant skills that help ...

Explore All Posts by Ilkka Turunen
