Open Source Dependency Management: Trends and Recommendations

Today's developers face so many choices and chores that managing dependencies can become overwhelming, inefficient, and tedious. Dependency management tasks are often derided in the development community and colloquially referred to as "Dependency Hell."

Many organizations expect developers to choose the best open source projects as components for software development. Unfortunately, a large percentage (85%) of projects on Maven Central are inactive, defined as having fewer than 500 downloads per month. This makes selecting the best option for a given project even more difficult.

In addition, the average Java application contains 148 dependencies, compared to 128 last year. The average Java project releases updates 10 times a year.

Along with choosing and managing 150 initial dependencies, developers are being asked to:

- Track an average of 1,500 dependency changes per year per application

- Possess significant security and legal expertise to choose the safest versions

- Maintain a working knowledge of software quality at all times

- Understand the nuances of ecosystems being used

- Sift through thousands of projects to pick the best ones

All this is expected in addition to their regular jobs of producing new software — and often with poor or no support to help in the myriad of decisions that must be continuously made. It is an overwhelming tide of information and responsibility, especially when we as an industry are asking for development teams to step on the pedal and go ever faster. Clearly, tasking the developer with a tsunami of options and limited tools creates tremendous inefficiencies on the one hand, while very likely putting the enterprise at risk on the other.

Micro Decision Making

In the 2021 State of the Software Supply Chain report, we dove into the differences between micro and macro architectural decisions when it comes to developing software. We also took an in-depth look at the wide variety of upgrade decisions made by engineering teams to maintain a secure and healthy Software Bill of Materials (SBOM), and found that 69% of all upgrade decisions were suboptimal. Our analysis this year found similar results.

Figure 3.1. Five Groups of Migration Decisions

This year, we're focusing on micro decisions – also known as version management – chosen after developers have made the macro decisions about which projects to build upon. These decisions are relative to the version of a dependency that developers choose to adopt and can vary widely between major and minor versions of the same library. We continue to explore upgrade decisions made by development teams and determine patterns of behavior, once again asking: how should these decisions be made at scale?

- Should companies expect software developers to intuitively know the right action to take?

- What are the benefits of making good decisions?

- What are the costs of making bad decisions?

- Do engineering leaders have a responsibility to equip developers with information designed to automate better decision making?

Methods of Analysis

To understand the quality of current dependency management decisions, and to answer these questions, we studied 131+ billion Maven Central downloads and 185,000 key enterprise applications, and analyzed migration patterns in the Java ecosystem.

In support of our research, in the 2021 Report we developed a scoring algorithm designed to measure the relative quality of dependency migration decisions. This version choice algorithm was derived from 8 common-sense rules distilled from the insights in the previous 7 reports we've done on the topic.

Figure 3.2. Eight Rules for Upgrading to the Optimal Version

Avoid Objectively Bad Choices

- Don't choose an alpha, beta, milestone, release candidate, etc. version.

- Don't upgrade to a vulnerable version.

- Upgrade to a lower risk severity if your current version is vulnerable.

- When a component is published twice in close succession, choose the later version.

Avoid Subjectively Bad Choices

- Choose a migration path (from version to version) others have chosen.

- Choose a version that minimizes breaking code changes.

- Choose a version that the majority of the population is using.

- If all else is tied, choose the newest version.
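The eight rules above can be read as a filter followed by a ranking. The sketch below is one possible interpretation, not the report's actual scoring algorithm: the `Candidate` fields, the two-day window for "close succession", and the idea of applying the subjective rules as ordered tie-breakers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    """One possible upgrade target. All fields are hypothetical inputs."""
    version: str
    released: date
    prerelease: bool        # alpha, beta, milestone, release candidate, ...
    severity: float         # worst known vulnerability score; 0.0 means none known
    migrations: int         # how many other projects made this same version move
    breaking_changes: int   # estimated breaking code changes for this move
    usage_share: float      # fraction of the population using this version


def choose_version(current_severity: float,
                   candidates: list[Candidate],
                   close: timedelta = timedelta(days=2)) -> Optional[Candidate]:
    # Rule 1: drop pre-release versions.
    pool = [c for c in candidates if not c.prerelease]
    # Rules 2 and 3: reject vulnerable versions, unless we are already
    # vulnerable and the candidate carries a lower severity.
    pool = [c for c in pool if c.severity == 0 or c.severity < current_severity]
    # Rule 4: when releases land in close succession, keep only the later one.
    pool.sort(key=lambda c: c.released)
    kept: list[Candidate] = []
    for c in pool:
        if kept and c.released - kept[-1].released <= close:
            kept[-1] = c
        else:
            kept.append(c)
    # Lowest severity first, then rules 5 to 8 as ordered tie-breakers:
    # popular migration path, fewest breaking changes, most used, newest.
    return max(
        kept,
        key=lambda c: (-c.severity, c.migrations, -c.breaking_changes,
                       c.usage_share, c.released),
        default=None,
    )


candidates = [
    Candidate("2.0.0-rc1", date(2022, 5, 1), True, 0.0, 900, 0, 0.30),
    Candidate("1.9.0", date(2022, 1, 10), False, 7.5, 800, 0, 0.40),
    Candidate("1.9.1", date(2022, 2, 1), False, 0.0, 500, 1, 0.20),
    Candidate("1.9.2", date(2022, 2, 2), False, 0.0, 500, 1, 0.10),
    Candidate("1.8.5", date(2021, 11, 1), False, 0.0, 100, 0, 0.05),
]
best = choose_version(current_severity=0.0, candidates=candidates)
print(best.version)  # 1.9.2
```

In this made-up data the release candidate and the vulnerable 1.9.0 are filtered out, 1.9.1 is superseded by 1.9.2 a day later, and 1.9.2 wins over 1.8.5 because more projects have followed that migration path.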

Overall Findings

- Proactive vs. Reactive - When upgrading, choosing the optimal version from within the Proactive version range and only upgrading when necessary is the best option for security and cost savings.

- Consumer vs. Maintainer - Open source consumers are responsible for the majority of risk.

- Supply Chain Management - Using the infamous Log4j and five other comparable exploits as a case study, we've found that software supply chain management is a requirement to tackle this problem.

Finding 1 — Proactive vs. Reactive

Two core behavior groups define upgrades: proactive and reactive.

A proactive approach to dependency management is important because, as the adage goes, software ages like milk, not wine. Sitting in the reactive zone is not only suboptimal from a security standpoint, but it also puts development teams at an immediate disadvantage when an issue arises. Based on the patterns analyzed, teams who made upgrade decisions into the proactive zone are in a far better position to make the best choice. However, some selections within this group are more efficient than others.

To further understand proactive vs. reactive behaviors, we consider what kinds of teams are at the root of this behavior in the Dependency Management Insights section below.

Dependency Management Insights

Patterns of Behavior

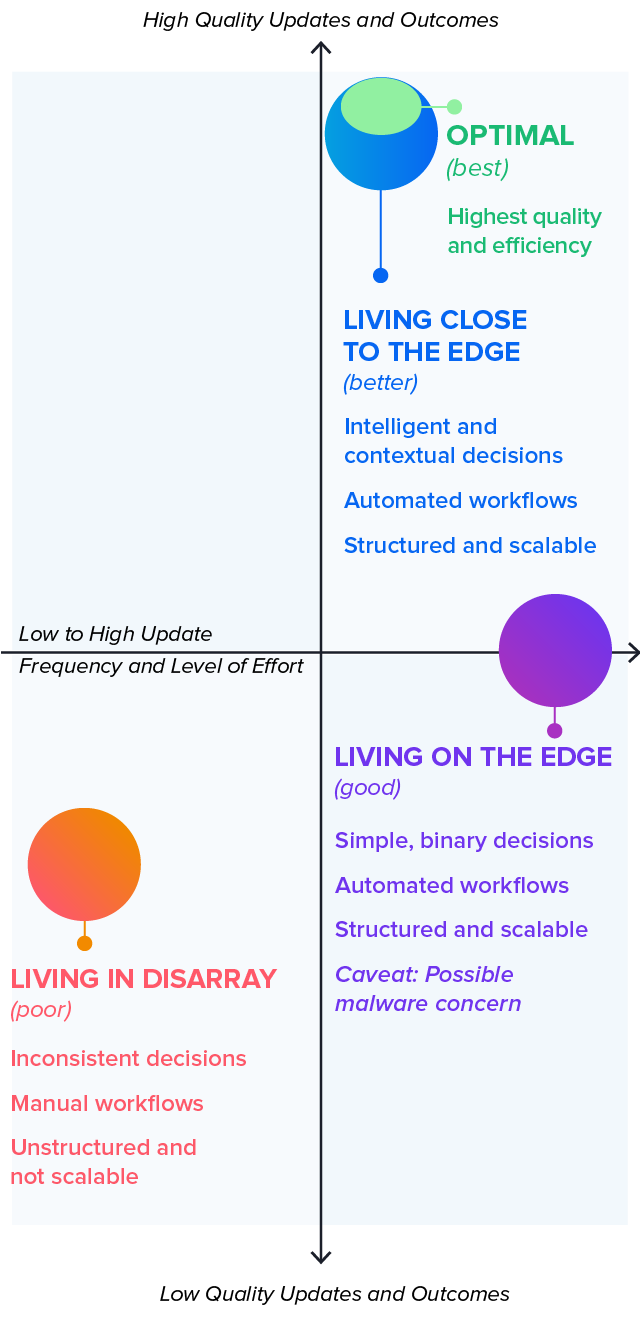

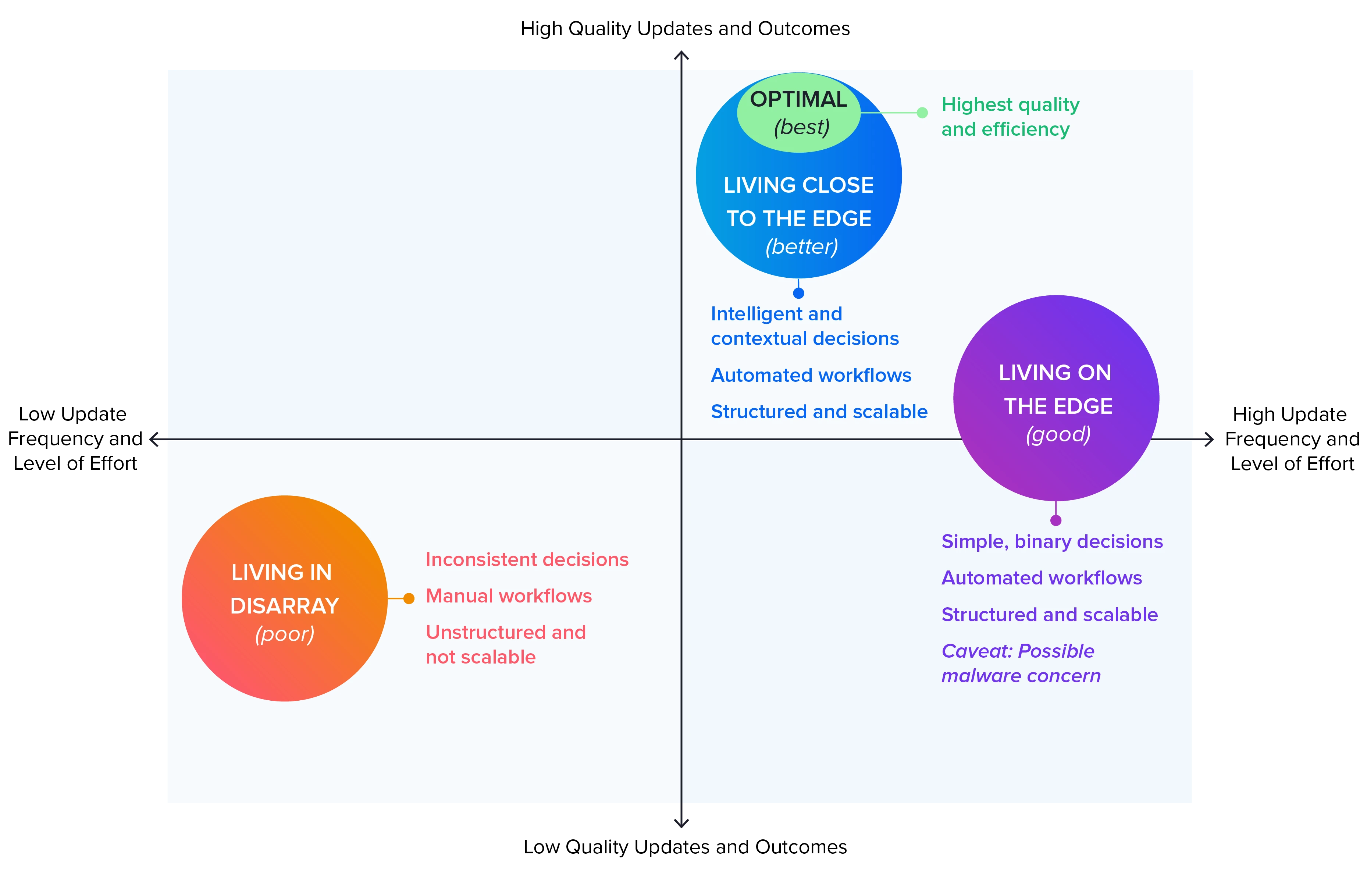

In 2021, we defined three patterns of dependency management behavior to help define and recommend a balanced approach for development teams. The best teams never work with ancient versions, nor are they on the bleeding edge. Instead, they are merely close to the edge. This year, we've enhanced the best option, Optimal, seen below.

Patterns of Dependency Management Behavior

Teams Living in Disarray

Developers working on these teams lack automated guidance. Dependency updates are infrequent. When updates do occur, they are usually a reaction to some external event, such as the discovery of a security vulnerability or the deprecation of a version. This commonly leads to suboptimal decisions being made. This approach to dependency management is highly reactive, wasteful, doesn't scale, and leads to stale software with elevated technical debt and increased security risk.

These groups are fundamentally reactive. If any group is driving without a seat belt, it is this one.

Teams Living on the Edge

Developers working on these teams benefit from simplistic but non-contextual automation. Dependencies are automatically updated to the latest version, whether optimal or not. Such automation helps to keep software fresh, but it can inadvertently expose software to malware and attacks on the software supply chain, including namespace confusion. An overly active upgrade approach carries higher costs and broken builds, derailing teams from spending time on feature work.

This approach is proactive and scalable, but not optimal in terms of expense or outcomes.

Teams Living Close to the Edge

Developers working on these teams have the advantage of intelligent and contextual automation. Dependencies are automatically recommended for updating, but only when necessary. This type of intelligent automation keeps software fresh without inadvertently introducing wasted effort or increased security risk.

This approach is proactive, scalable, and a major improvement concerning cost efficiency and quality outcomes.

Optimal

Optimal is a sub-group of Teams Living Close to the Edge. This is the best option for development teams and is determined by following the ruleset detailed above in Figure 3.2: Eight Rules for Upgrading to the Optimal Version.

All of these strategies for dependency management can be seen in their place on an axis of update quality and frequency, shown below.

Figure 3.3. Strategies for Dependency Management

Decision Quality

Teams Living on the Edge are still doing dramatically better than those Living in Disarray. Any teams worried that they are outdated or vulnerable can improve their position by focusing purely on being up-to-date. These teams would move out of the “Poor” and into the “Good” category below.

Figure 3.4. Update Management Decisions

However, because Teams Living on the Edge automatically update their dependencies to the latest version–whether optimal or not–they are still vulnerable to malicious attacks on the software supply chain. Teams can reduce risk, save time, and save money by merely being close to the edge. It therefore makes sense to choose software that is an average of 2.7 versions behind the latest. These update decisions are in the “Better” category.

Finally, the “Best” option narrows this down to the ideal decision, resulting in the safest direction with the fewest update cycles.

Efficient Upgrading

Individual version upgrades should at the very least aim for the proactive zone, but the optimal version is the best choice and over time leads to fewer required upgrades. The time and effort saved is of course a cost savings, but it also frees developers from a sometimes tedious and boring maintenance task. Teams can instead focus on creative software development, improving morale and delivering new value.

Last year we discussed issues with imperfect upgrade choices that result in over-frequent version updates. Without analysis of ideal upgrade options, teams will upgrade multiple times when only one update was necessary.

Since only 31% of upgrade decisions were found to be optimal, it is easy to see how much time and effort developers could save by consistently making better upgrade decisions.

By performing this kind of analysis before making an upgrade decision, teams can make sure they are always updating to the Optimal version, significantly reducing the total upgrades over time.
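To make the difference in upgrade counts concrete, here is a toy simulation. It is only a sketch under stated assumptions: the release timeline is invented, and "upgrade only when the current version has a known vulnerability" is a hypothetical stand-in for the report's upgrade triggers, which are not spelled out in the text.

```python
from typing import Optional

# (version, step at which a vulnerability in it becomes known, or None).
# One release appears per step; the data is made up for illustration.
Release = tuple[str, Optional[int]]


def count_upgrades(releases: list[Release], always_latest: bool) -> int:
    current = 0    # index of the release in use; start on the first one
    upgrades = 0
    for step in range(1, len(releases)):
        def flagged(i: int) -> bool:
            known_at = releases[i][1]
            return known_at is not None and known_at <= step

        if always_latest:
            # "On the edge": jump to every new release as it appears.
            target = step
        elif flagged(current):
            # Hypothetical trigger: move only once the current version is
            # known to be vulnerable, to the newest release not yet flagged.
            safe = [i for i in range(step + 1) if not flagged(i)]
            target = max(safe) if safe else current
        else:
            target = current
        if target != current:
            current, upgrades = target, upgrades + 1
    return upgrades


releases = [("1.0", 3), ("1.1", None), ("1.2", None),
            ("1.3", 5), ("1.4", None), ("1.5", None)]
print(count_upgrades(releases, always_latest=True))   # 5
print(count_upgrades(releases, always_latest=False))  # 2
```

Both strategies end on the same safe release in this example, but the trigger-based one gets there with two upgrades instead of five.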

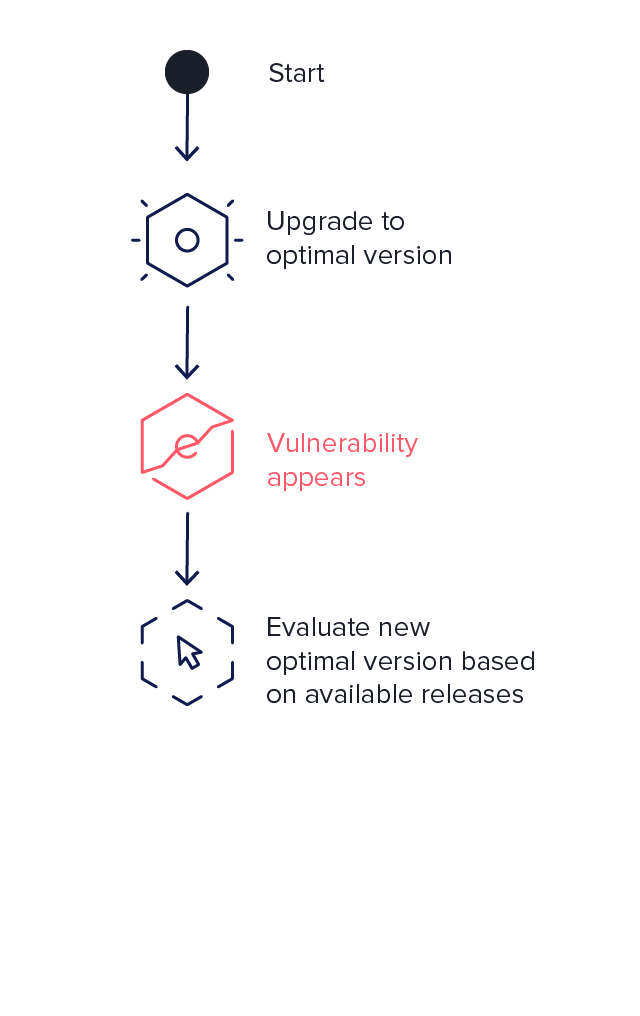

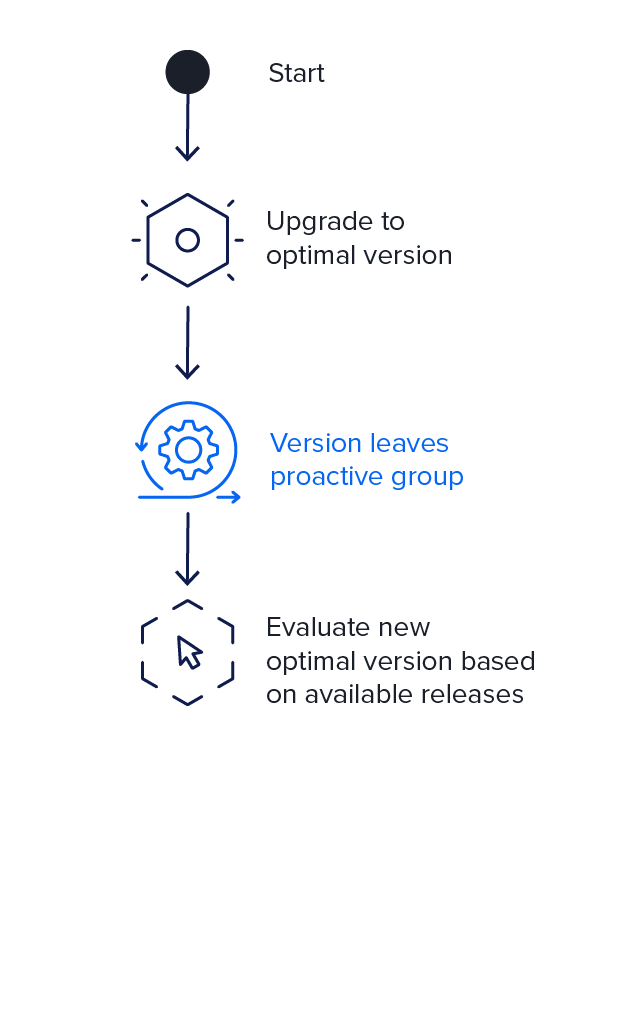

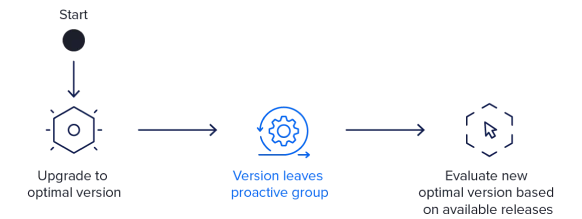

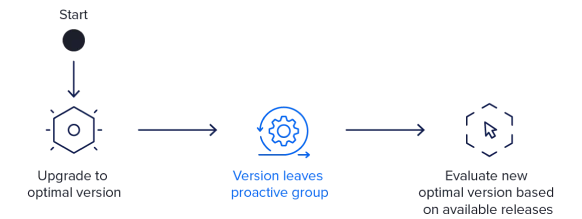

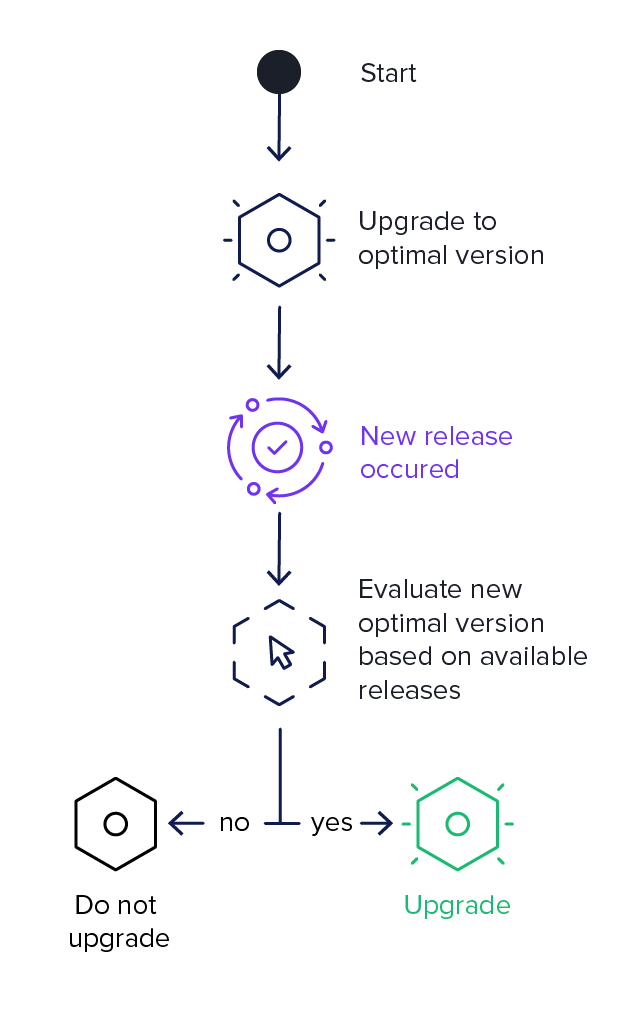

Optimal Upgrade Frequency

Because the dependency version considered “Optimal” changes over time, some teams could be tempted to evaluate each new version that appears for Optimal status. It might seem ideal to always stay within the Optimal zone, upgrading immediately according to the eight rules described in the Methods of Analysis section. However, because this “Optimal” status is so short-lived, doing so may result in many updates and unnecessary extra work.

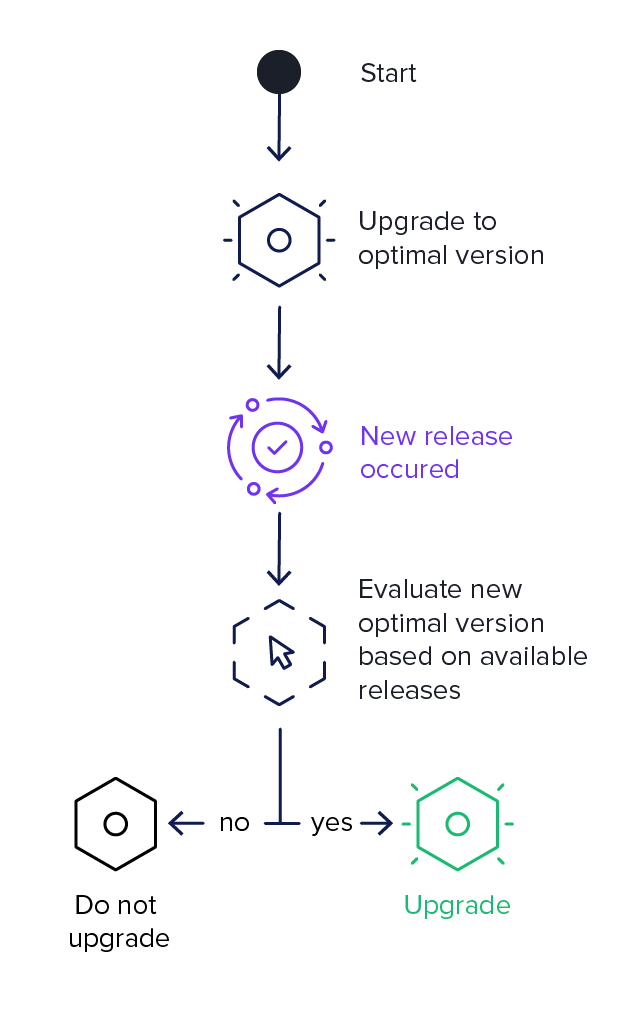

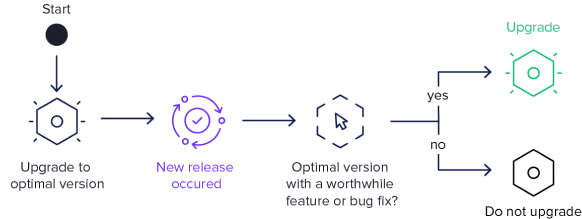

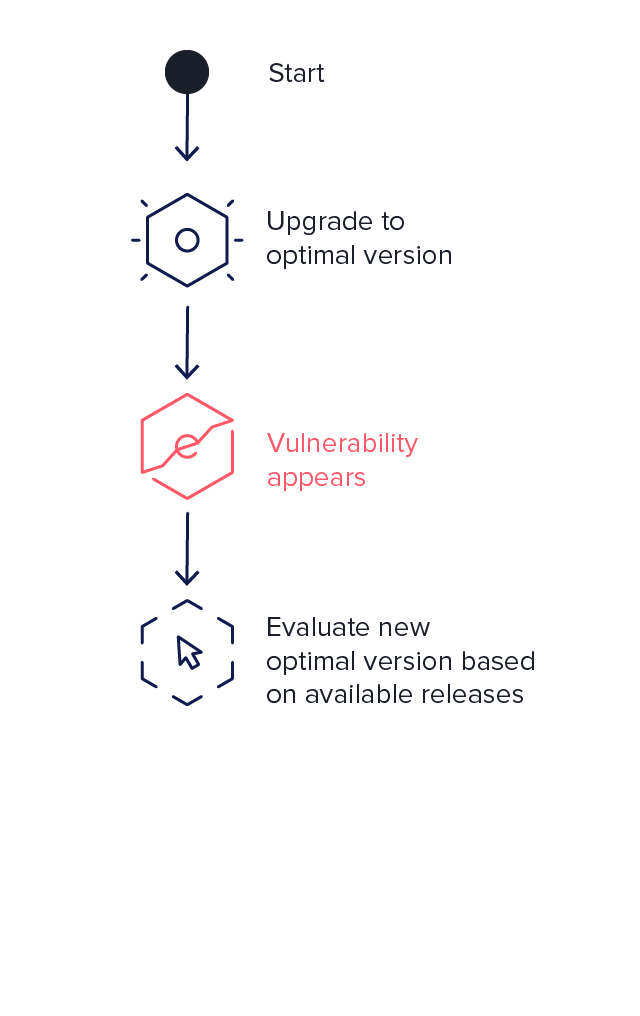

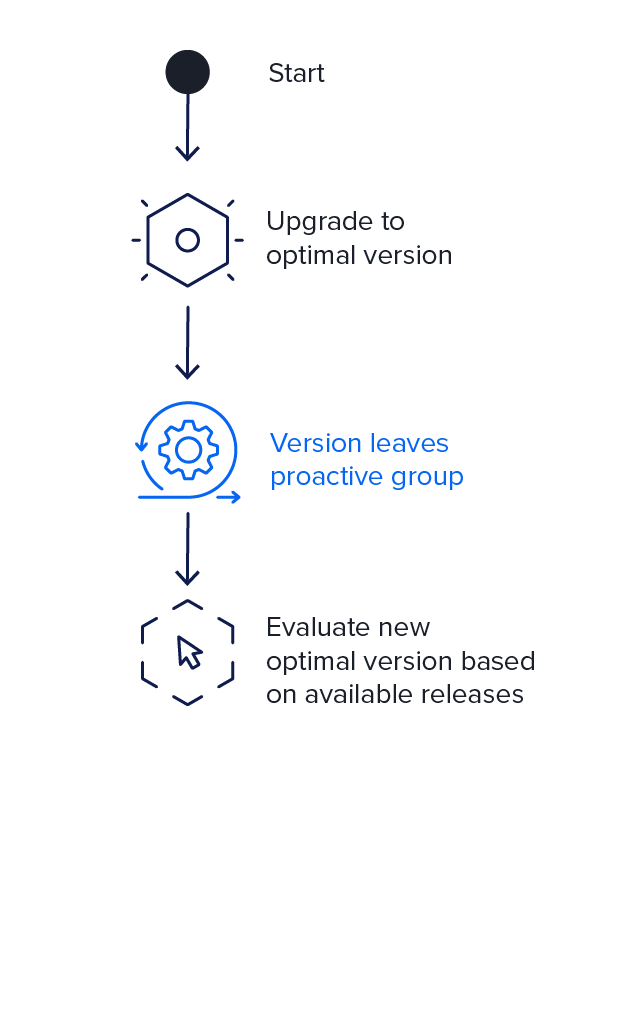

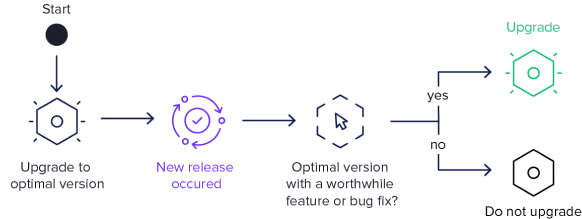

The best option for managing the shifting sands of new dependency versions is to wait until your current version meets one or more of the conditions below in Figure 3.5:

Figure 3.5. Dependency Management Triggers

Smarter Upgrade Timing Saves Money

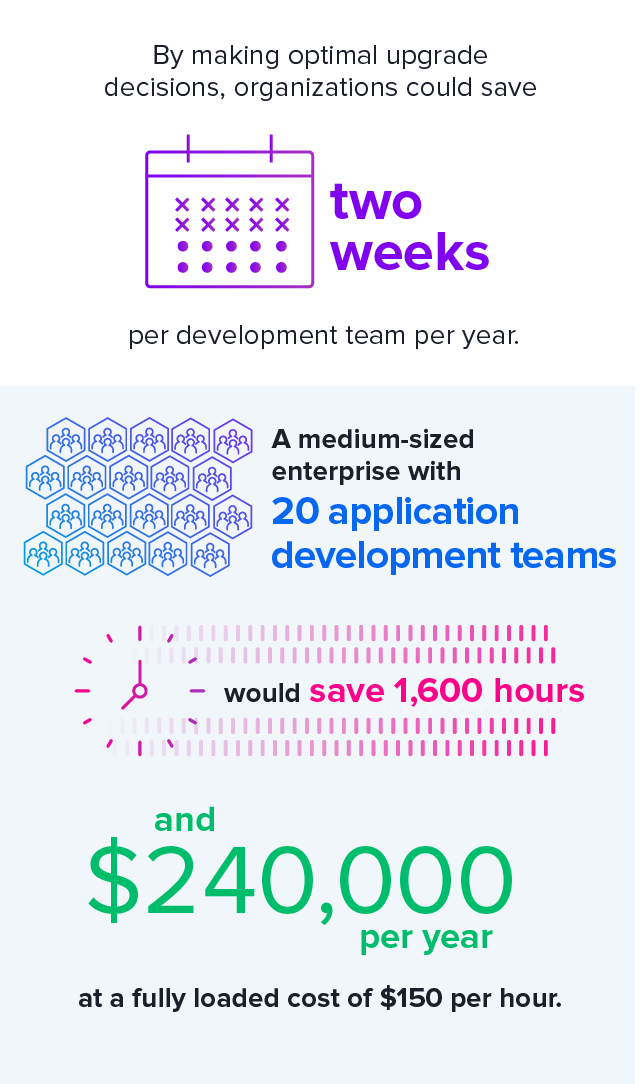

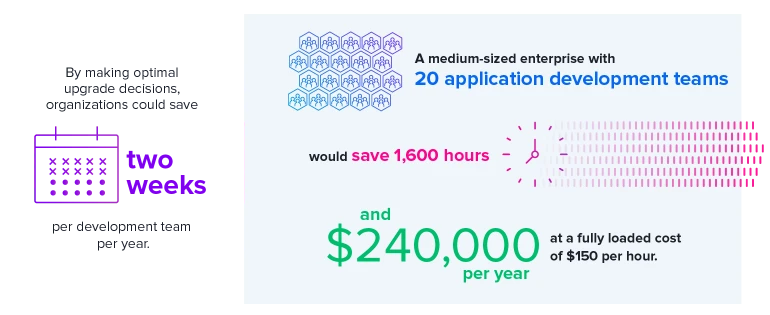

Reducing your total upgrades may seem like a risky proposition, but moving to safe versions and staying within that group as long as possible carries major cost savings. Individual update steps take 4 hours on average, or approximately 80 hours per application per year. These are tasks that are accomplished by a skilled developer. By delaying upgrades until necessary, you can cut this development task in half.

For a medium-sized enterprise with 20 application development teams, this could mean two additional development weeks per year per application as noted in Figure 3.6.

Figure 3.6. Time Saved with Optimal Upgrade Decisions

For individual teams, choosing the optimal version means you select the best balance of safety and efficiency.

Finding 2 — Consumer vs. Maintainer

After answering how to mitigate dependency management risk, we looked at who was creating the most risk.

As open source supply chain incidents have increasingly made their way into global headlines, questions about where security failures originate have surfaced again and again. Much attention has been paid to open source projects and their maintainers, often labeled as being irresponsible or unwilling to update their software. But who is really to blame?

According to Maven Central repository download data, open source consumers are proliferating the majority of open source risk. Immature consumption behavior is at the root of this – if we change behavior, enormous risk is immediately eliminated from the industry. More than that, there are solutions available today that help solve this problem. As seen in Figure 3.7, given the right tools, consumers can change their behaviors and greatly reduce their consumption of open source risk. Enterprises using software supply chain management solutions (Sonatype Nexus Lifecycle in this instance) have noted a 22.6% risk reduction.

However, these tools are not in widespread use, mostly because of a lack of awareness and perhaps an assumption that such solutions will impair development productivity. Interestingly, the opposite is true. With the right solutions implemented the right way, there is an opportunity for material productivity gains in conjunction with risk reduction.

Figure 3.7. Comparison of Mature vs. Immature Consumption Behavior Over Time (x-axis: weeks since vulnerability)

Source: Maven Central download statistics and a sampling of enterprise customers (Sonatype Nexus Lifecycle)

From the OpenSSF's Open Source Software Security Mobilization Plan to the establishment of community funds for maintainers, we continue to see most open source risk solutions focus heavily on maintainers. However, this one-pronged approach will only help solve part of the problem. While securing production is an excellent first step and very important, even more urgent is the need to secure consumption and raise awareness of the benefits.

What Did We Analyze?

We analyzed how the world consumes open source from Maven Central across 131+ billion downloads over the year. We compared downloads of vulnerable dependencies with no fixed version available to downloads of vulnerable dependencies where a fixed version was available but not chosen.

Figure 3.8. Consumer vs Maintainer of Vulnerable Downloads

How Common Are Vulnerable Downloads?

Of the 8.6 billion monthly downloads, 1.2 billion were of vulnerable components, meaning 14% of all downloads are vulnerable.

How Common Are Fixed Vulnerabilities?

We found that 55 million or 4.5% of the vulnerable dependencies were due to a vulnerable version with no available fix – meaning 95.5% of known-vulnerable downloads had a non-vulnerable option available. That means 1.18 billion avoidable vulnerable dependencies are being consumed each month.

Consumers of these no-fix-available projects were selecting these versions as they had no other choice. Despite the low number of vulnerable versions with no alternatives, a great majority of vulnerable versions that have a fix are still being downloaded.

How Common Are Vulnerable Releases?

Could the sheer volume of vulnerable releases be causing developers to choose vulnerable dependencies? The fact that a monthly average of 1.2 billion vulnerable dependencies were downloaded is a very big problem. But 1.2 billion vulnerable downloads does not equate to 1.2 billion vulnerable releases. How many vulnerable releases does that number actually represent?

There are approximately 10 million releases available for download in the Maven Central Repository. According to our data, only 35% of those releases (3.5 million) included a known-vulnerable issue. Of the vulnerable releases, only 4.2% (147,000) had no available fix.

This means that 95.8% of vulnerable downloaded releases had a fixed version available.
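The release percentages can be checked with a few lines of arithmetic. This is just a sanity check on the figures quoted above; the variable names are illustrative, and the total release count is derived here from the 35% share rather than taken as a separate data point.

```python
vulnerable_releases = 3_500_000   # releases with a known-vulnerable issue
vulnerable_share = 0.35           # their share of all releases
no_fix_releases = 147_000         # vulnerable releases with no available fix

# Total release count implied by "3.5 million is 35% of all releases".
implied_total = vulnerable_releases / vulnerable_share
no_fix_pct = 100 * no_fix_releases / vulnerable_releases
fix_available_pct = 100 - no_fix_pct

print(round(implied_total))                              # 10000000
print(round(no_fix_pct, 1), round(fix_available_pct, 1)) # 4.2 95.8
```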

Because the total releases with no fix available are a drop in the bucket compared to total vulnerable releases, we cannot assume these vulnerable releases are to blame. Could that 4.2% be improved? Certainly. However, our analysis shows that consumers are disproportionately selecting vulnerable versions when a fixed version is available.

How Many Poor Choices Are Being Made?

We now know that this very big problem comes from a relatively small number of releases. But, how many people are actually culpable in the problem space? There are approximately 26 million developers – or “consumers” – around the world. According to our data, around 14.4 million of those consumers are downloading vulnerable dependencies. Of this group, 5.7 million downloaded a dependency with no fix available–meaning 8.7 million consumers had a fixed choice available to them but still chose a vulnerable version.
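The consumer split follows the same kind of arithmetic. The sketch below only restates the figures from the paragraph above (in millions); the two derived percentages are computed here for context and do not appear in the report.

```python
total_consumers = 26.0          # developers worldwide, in millions
downloading_vulnerable = 14.4   # consumers downloading vulnerable dependencies
no_fix_available = 5.7          # of those, consumers with no fixed version to pick

# Consumers who had a fixed version available but chose a vulnerable one.
fix_available = downloading_vulnerable - no_fix_available

print(round(fix_available, 1))                                # 8.7
print(round(100 * downloading_vulnerable / total_consumers))  # 55
print(round(100 * fix_available / downloading_vulnerable))    # 60
```

Under these figures, a little over half of all consumers download vulnerable dependencies, and roughly six in ten of them had a fixed version they could have chosen.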

Even when a fixed version is available, perhaps as a result of maintainers better protecting OSS projects, 14.4 million consumers are still choosing a compromised version instead. Clearly, we cannot solve the issue of open source security without consumers changing their behaviors.

Why Are Consumers Making Poor Choices?

Why have 8.7 million open source consumers chosen vulnerable versions over non-vulnerable versions? Though there can be reasons for deliberately using vulnerable versions, they should be very rare. Examples include using a vulnerable version with no fix available to avoid a critical code break, or having a mitigating control in place. If the project maintainer has not fixed the vulnerability, it's time to change the underlying technology. Unfortunately, changes at that level are no easy task, requiring a lot of time, consideration, and investment, which organizations and engineering teams may not prioritize over speed or efficiency.

We're continuing to explore why this may be happening, but we've seen a few themes emerge over the years:

Possible Explanations for Poor Component Choice

Popularity - When deciding which dependencies to use in a development project, popularity is often used as a proxy for quality (i.e., “everyone else is using it, so it must be safe, secure, and reliable”). Theoretically, this makes sense, as more popular projects should be getting fixed faster. But they aren't. As revealed in our 2019 State of the Software Supply Chain Report, the popularity of a dependency does not correlate with a faster median update time. Developers may feel safe in selecting more popular projects, but just because a dependency is popular doesn't necessarily mean it's “better.”

Clarity - Oftentimes, developers aren't manually selecting individual versions when building software supply chains – those dependencies are already part of a project that's being used or built upon. As cited in the 2020 State of the Software Supply Chain Report, 80-90% of modern applications consist of open source software. If an SBOM and proper DevSecOps practices are not implemented, developers and software engineering teams may have no way of knowing that those vulnerable components are being used, pulled, or built upon.

Automation - Though there are plenty of open source automation tools, very few have security capabilities built in. Similar to the Clarity issue above, this automation may mask potential vulnerable dependencies, enabling developers to unknowingly build upon projects with known vulnerabilities.

Inactive Releases - There are almost 500,000 projects within Maven Central, but only ~74,000 of those projects are actively used. That means 85% of projects are sitting in this repository and taking up space, potentially overwhelming developers with available options.

As seen in Figure 3.8 above, removing the small percentage of no-fix-available releases (in blue on the left) would only remove 40% of the no-fix-available issues visible (in blue on the right). This is because – even if there were zero options with no available fix – consumers are still downloading vulnerable versions.

Since only a small percentage of the problem stems from open source project maintainers, the focus must shift. The most significant and persistent risks are owned by consumers, who need solutions to consistently choose safe versions.

Finding 3 — Log4j Case Study

In late 2021, a serious vulnerability surfaced in a widely-used open source logging framework–Log4j. The flaw impacted almost every Java-based software application from Minecraft to Tomcat. This flaw and its history underline many issues we've discussed so far:

Dependency Update Issues Arising During Log4j

Maintainer vs. consumer

Although the maintainer quickly released an update, consumers were slow to react.

Sluggish updates

Despite the update process requiring little effort (the changes were non-breaking), shifting to the safe versions has not been universal, with successful Log4j attacks still happening.

Organizations can prioritize critical issues

How did the industry react to the high profile Log4j critical notice versus other, less publicized vulnerabilities?

Response by Log4j's Open Source Maintainers vs. the Community

As discussed in Finding 2, open source project maintainers certainly bear some responsibility. However, Log4j is a strong example of rapid, effective response by project maintainers.

By all accounts, the Log4j project maintainers took reasonable and productive steps to resolve the issue at its source. This included fast tracking updates and handling the public disclosure in the best possible way, especially given the early and improper disclosure of the CVE. Despite this quick turnaround by the maintainers, our data shows poor adoption in the weeks and months that followed.

The data in Figure 3.9 below shows downloads of safe vs. vulnerable versions on Maven Central in the weeks that followed discovery:

Figure 3.9. Maven Central downloads of vulnerable vs. safe versions of Log4j

Here we see an example of open source consumers not taking advantage of the initiative and speed of project maintainers. Again, the choice of selecting safe versions is fully in the hands of consumers.

Our analysis of the vulnerability months after the disclosure shows that the lack of movement in this space remains an ongoing problem. Log4j exploits have continued to appear in the news.

Fortunately, our analysis of safe version adoption among enterprise consumers has been more encouraging thanks to available automation and tooling.

Companies Can Prioritize Severe Issues

It was clear from the publicity surrounding the Log4j issue that organizations can and will prioritize critical vulnerabilities. Whether it was external pressure that affected leadership (top-down) or broad acknowledgment at the developer level (bottom-up) likely varies by individual groups and development cultures.

What is unclear is how vulnerabilities are prioritized. Although a top-priority CVE may get published and distributed, our data suggests that not every critical issue gets the same attention and energy.

Our analysis of three critical priority vulnerabilities shows varying fix rates from discovery. In Figure 3.10 below, the graph lines represent scan data from over 185,000 anonymized applications. Here, a high percentage means a lower distribution of vulnerable versions, resulting in better security.

Figure 3.10. Enterprise Response Over Time of Critical Vulnerabilities Based On Media Coverage

What we saw may represent an outsized influence of press coverage on the response to software flaws. Log4j (in blue) received tremendous press, while SpringShell (in green) got some press. The last critical CVE (in red) received almost no press.

What seems clear is that no one wanted to get caught off guard by the biggest issue of 2021, and that organizations were able to respond and reduce their usage of vulnerable dependencies.

A serious concern is that two successive critical vulnerabilities may overload the press, with the second issue getting far less attention and potentially reduced fix rates. If the SpringShell vulnerability had been discovered earlier, could it have changed places with Log4j in our analysis?

Preparing for the Next Log4j

Every team has to behave reactively when crucial security issues appear. Even if you and your team have achieved and maintain a proactive status as described in Finding 1, it is inevitable that you will have to react to vulnerabilities. How will you react? What is your team's response?

This year's findings reiterate that if organizations have tooling, policy, and automation in place that maintains their place in the proactive group, their reaction should be business as usual. And as we will see in the next section, working at organizations where security issues are treated as normal defects has tremendous implications for job satisfaction.